I'm currently stuck on a problem that I can't solve because of my lack of mathematical knowledge.

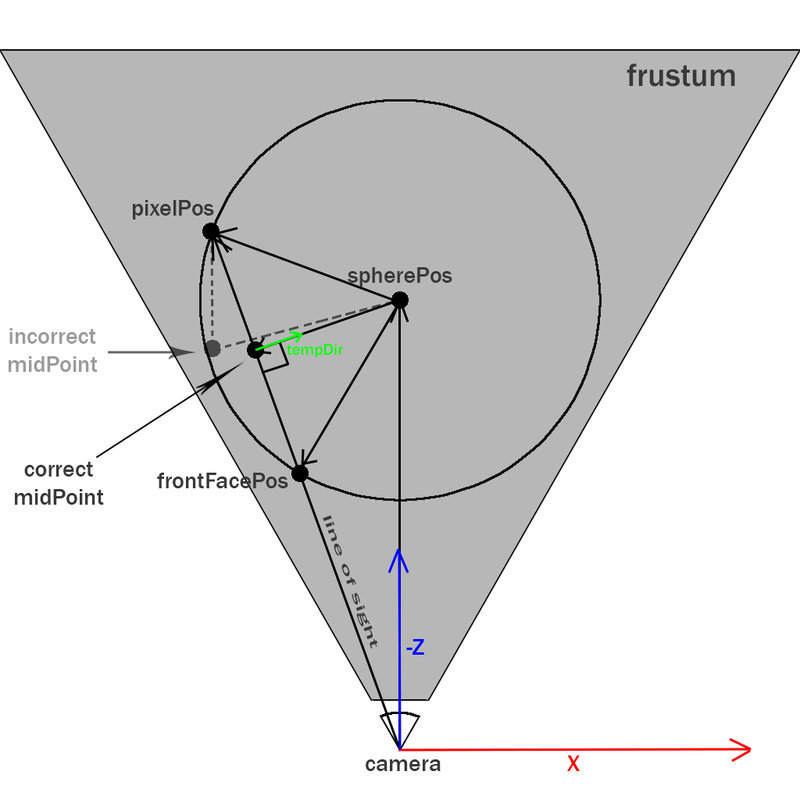

Here is a picture i made which sums up what i'm trying to do:

To put it simply:

I render spheres (simple 3D meshes) into the scene on a separate FBO using front-face culling, so that only the back side is rendered (the red part of the sphere in the picture).

In the fragment shader I can access the depth value of the rendered pixel through "gl_FragCoord.z". What I want to do now is calculate, for that exact same pixel, the depth value of the front-facing side of the sphere, so that I have a min and a max depth value telling me where the sphere starts and ends at that pixel. I need those values for post-processing.

My attempt to solve this was:

- Pass the current vertex position (in view space) into the fragment shader.

- Subtract the sphere's origin from that position (both in view space) to get an offset vector pointing from the origin to the back-face point ("offsetNormal" in the code).

- Mirror the z-component of this offset (as we are in view space).

- Add the mirrored offset to the origin, which gives the front-facing position on the sphere.

- Use this position to calculate a depth value the way the OpenGL depth buffer stores it. (I haven't done this properly yet.)
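One thing worth checking about the mirroring step: flipping the z-component yields the point on a line parallel to the view-space z-axis, which coincides with the pixel's view ray only under an orthographic projection (or at the exact screen center). Under a perspective projection the ray through a pixel starts at the eye, which is the view-space origin. The following is only a sketch under assumptions; the symbols are not from the code above: $\mathbf{c}$ is the sphere center in view space, $r$ its radius, $\mathbf{p}$ the back-face point, and $\mathbf{d} = \mathbf{p}/\lVert\mathbf{p}\rVert$ the ray direction.

$$
\begin{aligned}
\lVert t\,\mathbf{d} - \mathbf{c} \rVert^2 = r^2
\;&\Longrightarrow\;
t^2 - 2\,(\mathbf{d}\cdot\mathbf{c})\,t + \lVert\mathbf{c}\rVert^2 - r^2 = 0 \\
t_\text{front} + t_\text{back} &= 2\,(\mathbf{d}\cdot\mathbf{c}),
\qquad t_\text{back} = \lVert\mathbf{p}\rVert \\
\mathbf{p}_\text{front} = t_\text{front}\,\mathbf{d}
&= \left( \frac{2\,(\mathbf{p}\cdot\mathbf{c})}{\mathbf{p}\cdot\mathbf{p}} - 1 \right)\mathbf{p}
\end{aligned}
$$

The radius drops out because the two roots of the quadratic sum to $2\,(\mathbf{d}\cdot\mathbf{c})$. This assumes the eye is outside the sphere and that $\mathbf{p}$ lies exactly on the sphere, which a tessellated mesh only approximates.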

I may or may not have an error in my shader code. (Maybe the way I multiply the matrices is wrong?)

Here is my current code (a bit messy, but I tried to comment it):

//-------------- Vertex Shader --------------------

#version 330

layout (location = 0) in vec3 position;

layout (location = 1) in vec3 normal;

layout (location = 2) in vec4 color;

layout (location = 3) in vec2 uv;

uniform mat4 uProjectionMatrix;

uniform mat4 uModelViewMatrix;

out vec4 oColor;

out vec2 vTexcoord;

out vec4 vFragWorldPos;

out vec4 vOriginWorldPos;

out mat4 vProjectionMatrix;

void main()

{

oColor = color;

vTexcoord = uv;

//despite the "WorldPos" names, these coordinates are in view space!

vFragWorldPos = uModelViewMatrix * vec4(position,1.0);

vOriginWorldPos = uModelViewMatrix * vec4(0.0,0.0,0.0,1.0);

gl_Position = uProjectionMatrix * uModelViewMatrix * vec4(position,1.0);

//send projection matrix to the fragment shader

vProjectionMatrix = uProjectionMatrix;

}

//---------------- Fragment Shader --------------

#version 330

in vec4 oColor;

in vec2 vTexcoord;

out vec4 outputF;

//gl_FragCoord is a built-in input and does not need to be declared

uniform sampler2D sGeometryDepth;

in vec4 vFragWorldPos;

in vec4 vOriginWorldPos;

in mat4 vProjectionMatrix;

void main()

{

//get texture coordinates of the screenspace depthbuffer

//gl_FragCoord.xy already sits at the pixel center, so no extra offset is needed

//(resolution is hardcoded to 1280x720 for now)

vec2 relativeTexCoord = gl_FragCoord.xy / vec2(1280.0,720.0);

//depth

float backDepth = gl_FragCoord.z;//back depth

float geometryDepth = texture(sGeometryDepth,relativeTexCoord).r;//geometry depth (texture2D is not available in the 330 core profile)

//--------------- Calculation of front depth---------------

//get offset vector from the sphere origin to the fragment (in view space)

vec3 offsetNormal = (vFragWorldPos/vFragWorldPos.w).xyz-(vOriginWorldPos/vOriginWorldPos.w).xyz;

//mirror depth normal (z)

offsetNormal.z*=-1.0;

//add normal to origin point in order to get the mirrored coordinate point of the sphere

vec4 sphereMirrorPos = vOriginWorldPos;

sphereMirrorPos.xyz += offsetNormal.xyz;

//apply perspective calculation

vec4 projectedMirrorPos = vProjectionMatrix * sphereMirrorPos;

projectedMirrorPos/=projectedMirrorPos.w;

//TODO: CALCULATE PROPERLY

float frontDepth = projectedMirrorPos.z * 0.5 + 0.5; // NDC z [-1,1] mapped to [0,1]; no idea what to do here further

//want to color only the pixels where the scene depth (provided by a screen space texture which is a depthbuffer of a different FBO)

//is exactly in between the min/max depth values of the sphere

if(backDepth>geometryDepth && frontDepth<geometryDepth){

outputF = vec4(1.0,1.0,1.0,1.0);

}else{

discard;

}

}
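As a possible alternative to mirroring the z-component, the front point could be taken as the second intersection of the eye ray with the sphere. This is a minimal sketch, not a tested fix: the helper name "frontPointOnViewRay" is hypothetical, and it assumes a perspective projection with the eye at the view-space origin, the eye outside the sphere, and a back-face position that lies on the sphere surface.

```glsl
// Hypothetical helper, not part of the original shader.
// backPos: view-space position of the back-face fragment (vFragWorldPos.xyz)
// center:  view-space position of the sphere center (vOriginWorldPos.xyz)
// Returns the other point where the eye ray through backPos hits the sphere.
vec3 frontPointOnViewRay(vec3 backPos, vec3 center)
{
    // Points on the ray are t * d with d = normalize(backPos).
    // The two hit distances sum to 2 * dot(d, center), and the far one
    // equals length(backPos), so the near one follows without the radius.
    float scale = 2.0 * dot(backPos, center) / dot(backPos, backPos) - 1.0;
    return backPos * scale;
}
```

In the fragment shader above it would be called as frontPointOnViewRay(vFragWorldPos.xyz, vOriginWorldPos.xyz), and the result would take the place of sphereMirrorPos.xyz before the projection step.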

I suspect that transforming the coordinates into view space in the vertex shader (and working with those coordinates) may be part of the problem; for example, I have no idea where or when to divide by "W". I'm also stuck at the part where I have to calculate depth values in the same range as the OpenGL depth buffer, so that I can compare them in the if statement at the end of the fragment shader.
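For reference, this is how I understand the conversion from a view-space position to a depth-buffer value; treat it as a sketch under assumptions rather than a confirmed solution. The helper name "viewPosToWindowDepth" is hypothetical, and it assumes the default clip-space convention (NDC z in [-1, 1]). The only divide by w that matters is the one after the projection matrix; in view space, w stays 1 as long as the model-view matrix is built from rotations, translations and scales.

```glsl
// Hypothetical helper, not part of the original shader.
// viewPos:    a position in view space (w is implicitly 1)
// projection: the same projection matrix that is used for gl_Position
// Returns window-space depth, comparable to gl_FragCoord.z and to values
// read from a depth texture.
float viewPosToWindowDepth(vec3 viewPos, mat4 projection)
{
    vec4 clipPos = projection * vec4(viewPos, 1.0); // clip space
    float ndcZ = clipPos.z / clipPos.w;             // perspective divide
    // Map NDC z through the depth range. With the default glDepthRange(0, 1)
    // this reduces to ndcZ * 0.5 + 0.5.
    return 0.5 * (gl_DepthRange.diff * ndcZ + gl_DepthRange.near + gl_DepthRange.far);
}
```

With the default depth range the result matches the "z * 0.5 + 0.5" line in my shader, so that mapping itself may already be fine and the mismatch could come from the position that goes into it.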

Hints/help would be greatly appreciated.