Something I've wanted to do for a very long time is to create an engine for rendering solar systems and planets of realistic scale. Over the past 3 months I built the technology required to make it happen. I had to completely overhaul my game engine to deal with the problem of massive scale.

Game engines and GPUs do most of their math with 32-bit floating point numbers, but a 32-bit float has only a 24-bit significand (about 16.7 million distinct steps), which means that at the radius of an Earth-sized planet you only get roughly half a meter to a meter of precision (obviously not enough!). If you were to use floats for everything, rendering would jitter like crazy and physics would blow up. Double precision can help, but it is slower and there is already a lot of effort invested in single-precision code (e.g. physics).
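To put numbers on this, here is a small sketch that measures the gap between adjacent 32-bit floats at a given magnitude. It only uses Python's `struct` module; the helper names (`to_f32`, `f32_spacing`) are made up for illustration, and the two distances are rough values for Earth's radius and one astronomical unit.

```python
import struct

def to_f32(x: float) -> float:
    """Round a Python float (a double) to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def f32_spacing(x: float) -> float:
    """Gap between x (rounded to float32) and the next larger float32.

    Valid for positive, finite x: adding 1 to the bit pattern of a
    positive float yields the next representable value.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    next_value = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return next_value - to_f32(x)

EARTH_RADIUS_M = 6.371e6   # approximate
ONE_AU_M = 1.496e11        # approximate

print(f32_spacing(EARTH_RADIUS_M))  # 0.5      (half a meter at the surface)
print(f32_spacing(ONE_AU_M))        # 16384.0  (about 16 km at Earth's orbit)
```

So a single float can barely resolve a footstep on the planet's surface, and is hopeless at solar-system scale.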

To solve this, I use a hierarchy of local reference frames, where each object's position is stored relative to its reference frame rather than to some world origin. This allows for scenes of any size, but makes everything a game engine does much more complicated.
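A minimal sketch of the idea, not the engine's actual code: frames form a tree, and the position of one object relative to another is found by walking both up to their nearest common ancestor only. The `Frame` class and function names are hypothetical, frames are assumed to be translation-only (no rotation), and Python floats are doubles, whereas a real engine would keep the small local values in single precision.

```python
from dataclasses import dataclass
from typing import Optional

Vec3 = tuple[float, float, float]

@dataclass
class Frame:
    name: str
    parent: Optional["Frame"] = None
    offset: Vec3 = (0.0, 0.0, 0.0)  # this frame's origin, in parent coordinates

def path_to_root(frame: Optional[Frame]) -> list[Frame]:
    path = []
    while frame is not None:
        path.append(frame)
        frame = frame.parent
    return path

def to_ancestor(pos: Vec3, frame: Frame, ancestor: Frame) -> Vec3:
    """Express pos (given in `frame`) in the coordinates of `ancestor`."""
    x, y, z = pos
    while frame is not ancestor:
        ox, oy, oz = frame.offset
        x, y, z = x + ox, y + oy, z + oz
        frame = frame.parent
    return (x, y, z)

def relative_position(pos_a: Vec3, frame_a: Frame,
                      pos_b: Vec3, frame_b: Frame) -> Vec3:
    """Vector from object A to object B, touching only the frames below
    their nearest common ancestor, so huge offsets higher up never enter."""
    ancestors_of_a = {id(f) for f in path_to_root(frame_a)}
    common = next(f for f in path_to_root(frame_b) if id(f) in ancestors_of_a)
    a = to_ancestor(pos_a, frame_a, common)
    b = to_ancestor(pos_b, frame_b, common)
    return tuple(bi - ai for ai, bi in zip(a, b))

sun = Frame("sun")
planet = Frame("planet", sun, (1.5e11, 0.0, 0.0))
tile_a = Frame("tile_a", planet, (6.371e6, 0.0, 0.0))
tile_b = Frame("tile_b", planet, (6.371e6, 100.0, 0.0))

# Two objects on neighboring tiles: the 1.5e11 m offset to the sun is never used.
print(relative_position((1.0, 2.0, 0.0), tile_a, (3.0, -1.0, 0.0), tile_b))
# (2.0, 97.0, 0.0)
```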

I had to rewrite the graphics renderer to deal with the limited precision by rendering the scene in "slices" at different depths, and by treating all object coordinates as a unit vector plus a distance offset from the camera. Faraway objects are scaled down around the camera to a manageable size. This also requires scaling the lighting calculations for everything to look right. Shadows are a nightmare that I haven't completely solved.
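One way to picture the "scale down around the camera" step is the sketch below. It is an assumption about how such a scheme could work, not the renderer described above: an object beyond some maximum render distance is pulled in along its direction from the camera and shrunk by the same factor, so its apparent (angular) size is unchanged. The function name and the `max_distance` parameter are hypothetical.

```python
import math

def compress_for_rendering(rel_pos, radius, max_distance):
    """Pull a distant object in to max_distance, preserving angular size.

    rel_pos is the object's position relative to the camera.
    Returns (new_rel_pos, new_radius, scale).
    """
    dist = math.sqrt(sum(c * c for c in rel_pos))
    if dist <= max_distance:
        return tuple(rel_pos), radius, 1.0
    direction = tuple(c / dist for c in rel_pos)  # unit vector from camera
    scale = max_distance / dist
    new_pos = tuple(c * max_distance for c in direction)
    return new_pos, radius * scale, scale

# A planet-sized sphere a million kilometers away, squeezed to 100 km.
pos, r, s = compress_for_rendering((0.0, 0.0, 1.0e9), 6.371e6, 1.0e5)
print(pos, r, s)
```

Because distances are no longer physical after this step, anything that depends on distance (light falloff, fog, and so on) has to be computed with the original distance or corrected by `scale`, which is presumably why the lighting needs adjusting too.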

To do physics in such a situation is also difficult because the physics engine needs to be aware that every object is potentially in a different reference frame, yet still needs to handle collisions and contact properly between those objects. I had to deeply integrate the concept of reference frames into the physics engine for this to work. I don't think this would be possible with an off-the-shelf physics engine.

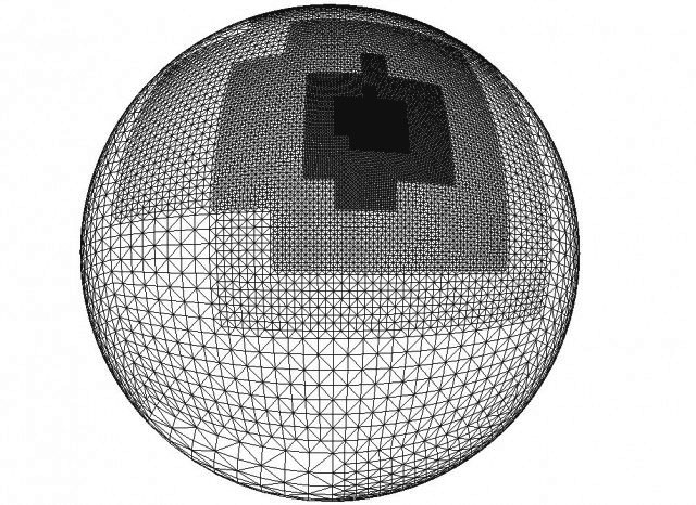

https://gamedev.stackexchange.com/questions/149762/quad-sphere-subdivision-algorithm

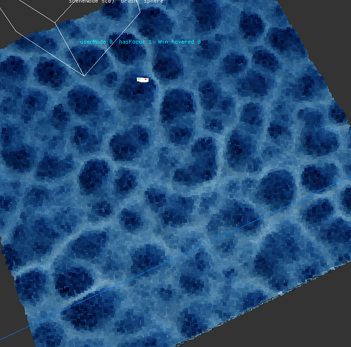

Then I had to work on the planet generation itself, which is non-trivial. Planets are split into a hierarchy of quadrilateral tiles on each face of a cube that is projected onto the sphere. Tiles are recursively refined in detail in order to satisfy the requirements of observer(s) in the scene. Each tile is generated from its parent tile by a 2-step process: interpolate (upsample) a quarter of the parent tile, then add noise to increase the detail.
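The tile hierarchy can be sketched as a quadtree per cube face. The following is only an illustration under assumptions: the `TileAddress` layout, the face ordering, and plain normalization as the cube-to-sphere projection are all hypothetical choices (normalization is the simplest mapping, but it does not give equal-area tiles).

```python
import math
from typing import NamedTuple

class TileAddress(NamedTuple):
    face: int    # 0..5, assumed order: +X, -X, +Y, -Y, +Z, -Z
    level: int   # 0 = whole face, each level halves the tile size
    x: int       # tile column within the face, 0 .. 2**level - 1
    y: int       # tile row within the face,    0 .. 2**level - 1

    def children(self):
        """The four tiles that refine this one at the next level."""
        return [TileAddress(self.face, self.level + 1,
                            2 * self.x + dx, 2 * self.y + dy)
                for dy in (0, 1) for dx in (0, 1)]

def cube_point(face, u, v):
    """Point on the surface of the cube [-1, 1]^3 for face coordinates u, v."""
    return [(1.0, u, v), (-1.0, u, v),
            (u, 1.0, v), (u, -1.0, v),
            (u, v, 1.0), (u, v, -1.0)][face]

def tile_point_on_sphere(addr, s, t):
    """Map (s, t) in [0, 1]^2 inside a tile to a point on the unit sphere."""
    n = 2 ** addr.level
    u = -1.0 + 2.0 * (addr.x + s) / n
    v = -1.0 + 2.0 * (addr.y + t) / n
    px, py, pz = cube_point(addr.face, u, v)
    length = math.sqrt(px * px + py * py + pz * pz)
    return (px / length, py / length, pz / length)

root = TileAddress(face=0, level=0, x=0, y=0)
print(tile_point_on_sphere(root, 0.5, 0.5))  # (1.0, 0.0, 0.0), center of +X face
print(root.children()[3])                    # TileAddress(face=0, level=1, x=1, y=1)
```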

The noise itself must be generated coherently across the entire sphere in a deterministic way. This means that the noise for every tile is initialized with a random seed that is derived from the tile's address (location) on the cube-sphere. For continuity, the tile borders (edges and vertices) need to have the same noise as the neighbors, so there also needs to be a way to uniquely identify tile edges and vertices at any level of detail. There is also some overlap with adjacent tiles which is necessary for continuity during interpolation, and for calculation of correct surface normals.
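One possible way to get border-consistent noise, sketched under assumptions: identify each grid vertex by its integer position on the cube surface at a given level, then hash that position together with a planet seed. A vertex on an edge shared by two faces gets the same 3D coordinates from either face, so both tiles see the same value. The face ordering, the function names, and the use of SHA-256 as the hash are illustrative choices, not what the engine necessarily does.

```python
import hashlib
import struct

def cube_vertex(face, level, i, j):
    """Integer cube-surface coordinates of grid vertex (i, j) on a face.

    i and j run from 0 to 2**level inclusive. Assumed face order:
    +X, -X, +Y, -Y, +Z, -Z.
    """
    n = 1 << level
    return [(n, i, j), (0, i, j),
            (i, n, j), (i, 0, j),
            (i, j, n), (i, j, 0)][face]

def vertex_noise(planet_seed, level, vertex):
    """Deterministic pseudo-random value in about [-1, 1] for a vertex."""
    data = struct.pack("<qiiii", planet_seed, level, *vertex)
    digest = hashlib.sha256(data).digest()
    value = int.from_bytes(digest[:8], "little")
    return value / 2**63 - 1.0

level = 3
n = 1 << level
# The same physical vertex, reached from the +X face and from the +Y face.
from_x_face = cube_vertex(0, level, n, 5)
from_y_face = cube_vertex(2, level, n, 5)
print(from_x_face == from_y_face)  # True
print(vertex_noise(42, level, from_x_face) == vertex_noise(42, level, from_y_face))  # True
```

A cryptographic hash is overkill for real-time use; a cheaper integer hash would serve the same purpose as long as it is deterministic across runs and machines.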

“Square-square” interpolation (Miller, ACM SIGGRAPH 1986) is used to get a smooth (cubic?) interpolation of the parent noise.
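A minimal sketch of square-square subdivision, assuming the commonly described 9:3:3:1 weighting: every cell of the parent grid produces four new samples, each a weighted average of the cell's four corners with the nearest corner weighted 9/16. The function name is hypothetical and this is not the engine's code. As far as I know, repeating this scheme converges toward a quadratic rather than cubic B-spline surface, so the "cubic" label deserves the question mark.

```python
def square_square_upsample(grid):
    """One level of square-square subdivision on a 2D list of heights.

    An input of R x C samples yields 2*(R-1) x 2*(C-1) samples, all lying
    strictly inside the parent grid.
    """
    rows, cols = len(grid), len(grid[0])
    out = [[0.0] * (2 * (cols - 1)) for _ in range(2 * (rows - 1))]
    for r in range(rows - 1):
        for c in range(cols - 1):
            for dr in (0, 1):
                for dc in (0, 1):
                    near_r, far_r = r + dr, r + 1 - dr
                    near_c, far_c = c + dc, c + 1 - dc
                    out[2 * r + dr][2 * c + dc] = (
                        9 * grid[near_r][near_c]
                        + 3 * grid[near_r][far_c]
                        + 3 * grid[far_r][near_c]
                        + 1 * grid[far_r][far_c]
                    ) / 16
    return out

# A linear ramp stays linear after subdivision.
print(square_square_upsample([[0, 4], [0, 4]]))  # [[1.0, 3.0], [1.0, 3.0]]
```

Note that the output never reaches the outer edge of the input, which is one reason a tile needs a margin of overlapping parent samples around it.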

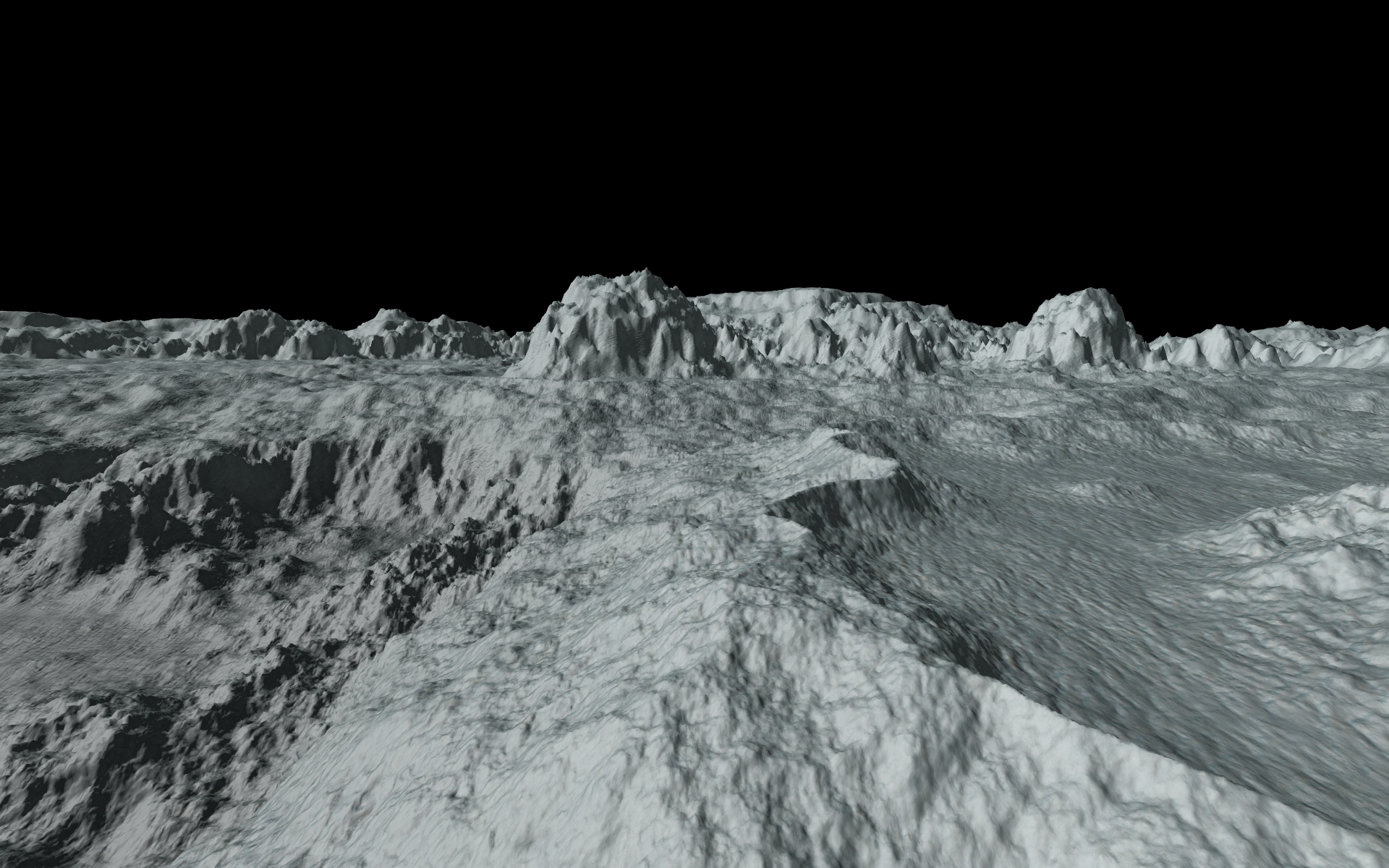

The resulting fractal noise is transformed using some functions to achieve a desired terrain appearance, and this is used as the elevation for the tiles. The elevation is converted to a triangle mesh, projected from cube to sphere, and then offset relative to the tile's local reference frame.

Texturing is done using a tri-planar texture mapping technique in the local space of the tile vertices. (I tried world space first, but that broke when changing reference frames.) To avoid texture tiling artifacts, I use a "fractal" distance-based texture scaling that blends the texture at two different scales.
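The two ingredients can be sketched roughly as follows. Both functions are assumptions about how such a scheme is typically built, not the shader used here: tri-planar weights come from the surface normal, and the two texture scales are adjacent powers of two chosen from the camera distance, with a blend factor that moves smoothly between them. The names, the `sharpness` exponent, and `base_distance` are hypothetical.

```python
import math

def triplanar_weights(normal, sharpness=4.0):
    """Blend weights for the X, Y and Z texture projections.

    normal must be non-zero. A higher sharpness narrows the transition
    zone between projections.
    """
    raw = [abs(c) ** sharpness for c in normal]
    total = sum(raw)
    return tuple(w / total for w in raw)

def fractal_scales(distance, base_distance=1.0):
    """Two texture scales (adjacent powers of two) and a blend factor.

    At blend = 0 only the first scale is visible; as distance doubles the
    blend reaches 1 and the second scale becomes the next level's first
    scale, so there is no visible pop.
    """
    level = math.log2(max(distance, base_distance) / base_distance)
    lower = math.floor(level)
    blend = level - lower
    return 2.0 ** lower, 2.0 ** (lower + 1), blend

print(triplanar_weights((0.0, 0.0, 1.0)))  # (0.0, 0.0, 1.0)
print(fractal_scales(4.0))                 # (4.0, 8.0, 0.0)
```

In a shader, the final color would be something like `mix(sample(uv / scale_a), sample(uv / scale_b), blend)` evaluated for each of the three projections and then combined with the tri-planar weights.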

There's still a ton of stuff to do to improve the fidelity of the generation and rendering. I don't have any sky/atmosphere rendering, nor any stars, nor water, nor any objects (e.g. rocks) placed on the surface. Eventually, I want to simulate the planetary geology (tectonic simulation) and do a hierarchical simulation of erosion. These will be used with a complex model of materials to achieve more realistic terrain than other systems (e.g. No Man's Sky), with rock strata of various types, and as many other geological features as I can model efficiently.

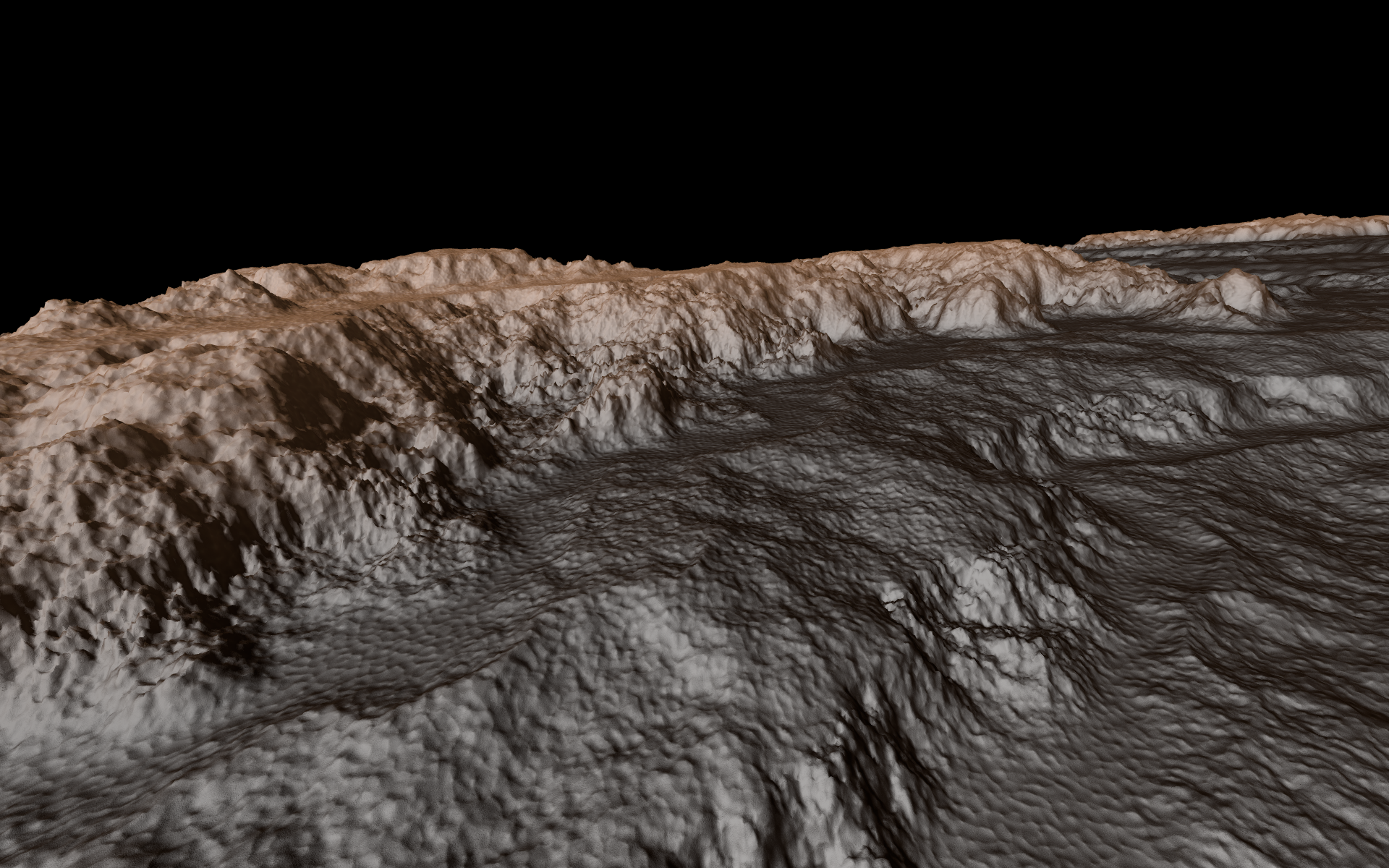

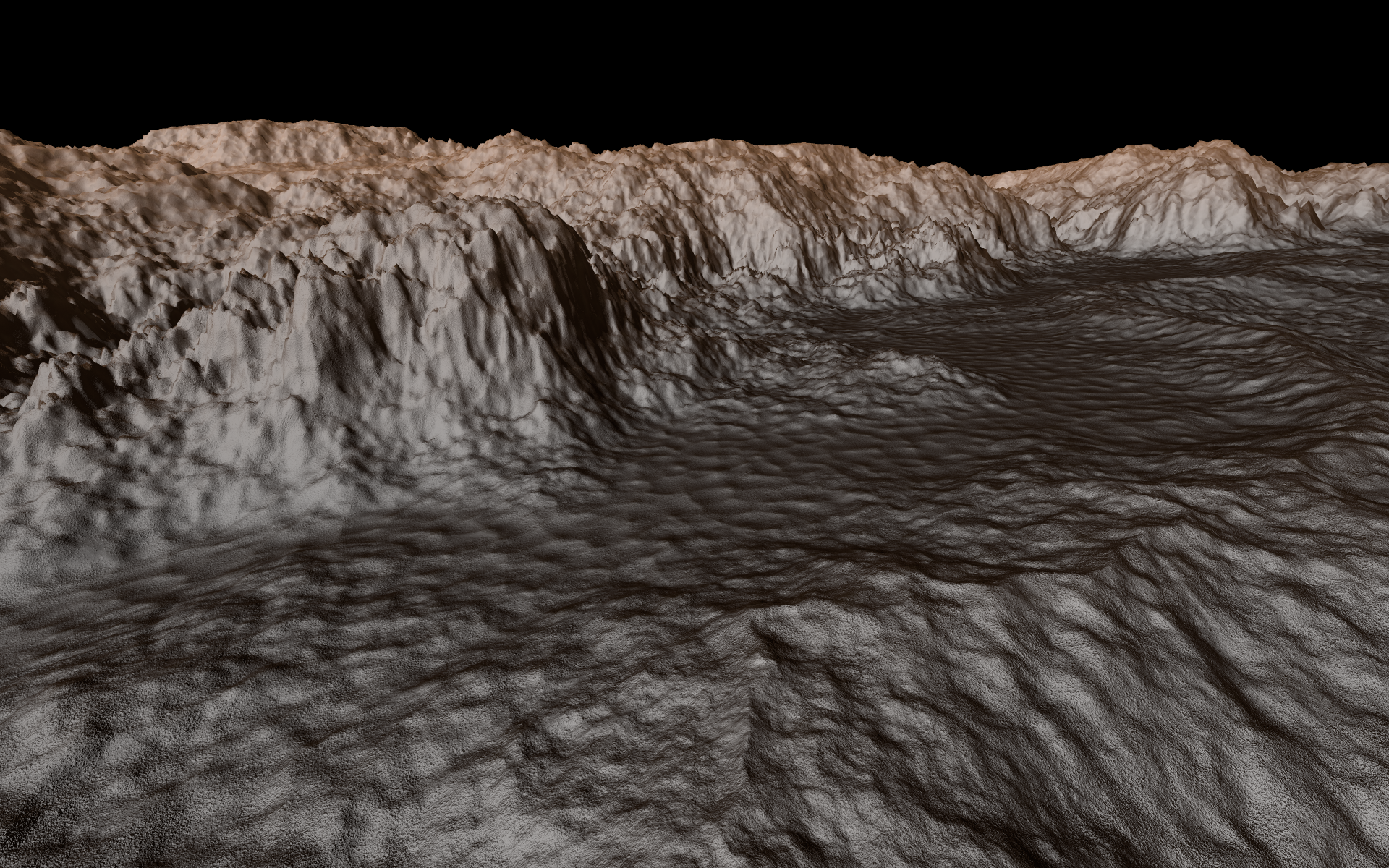

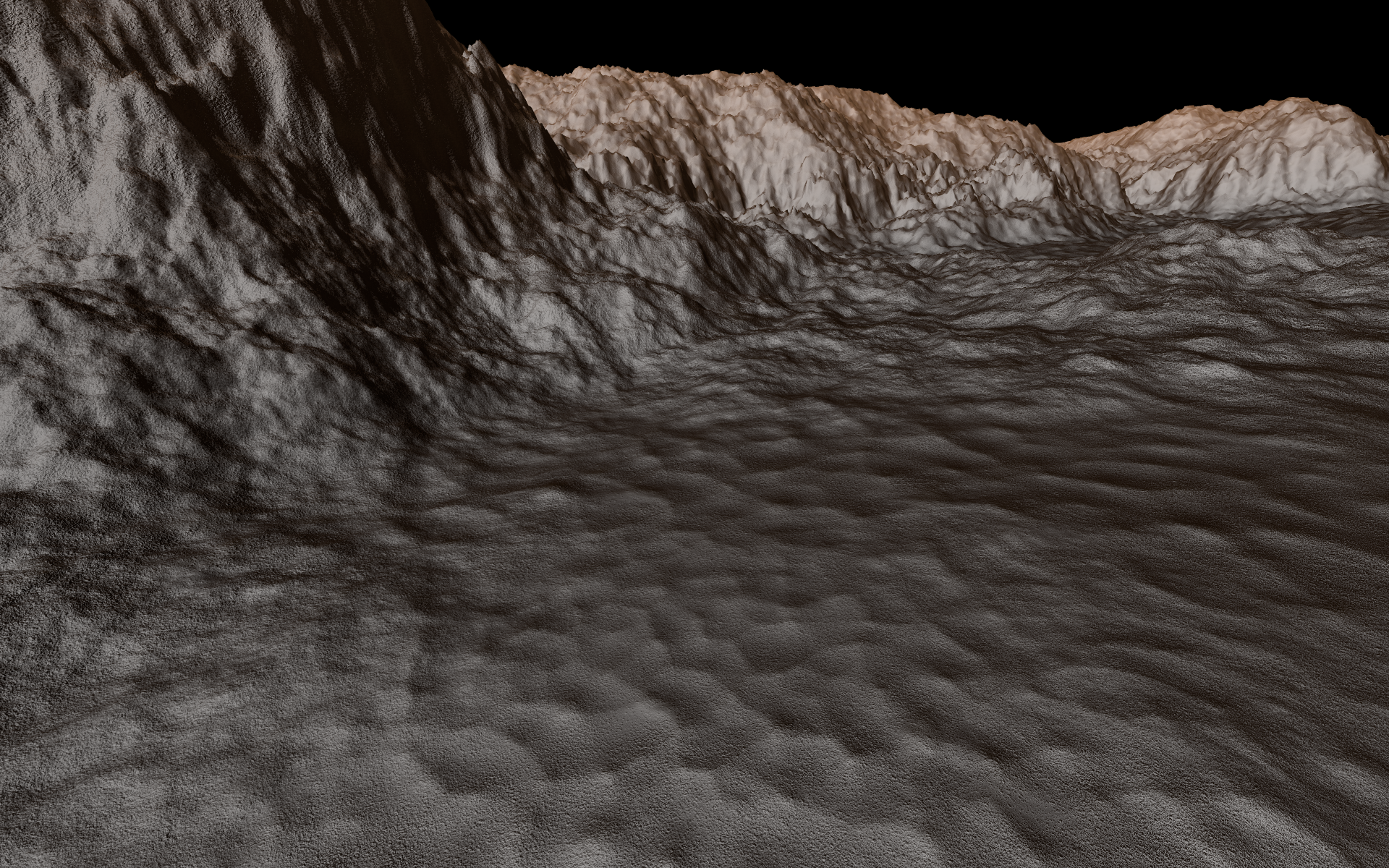

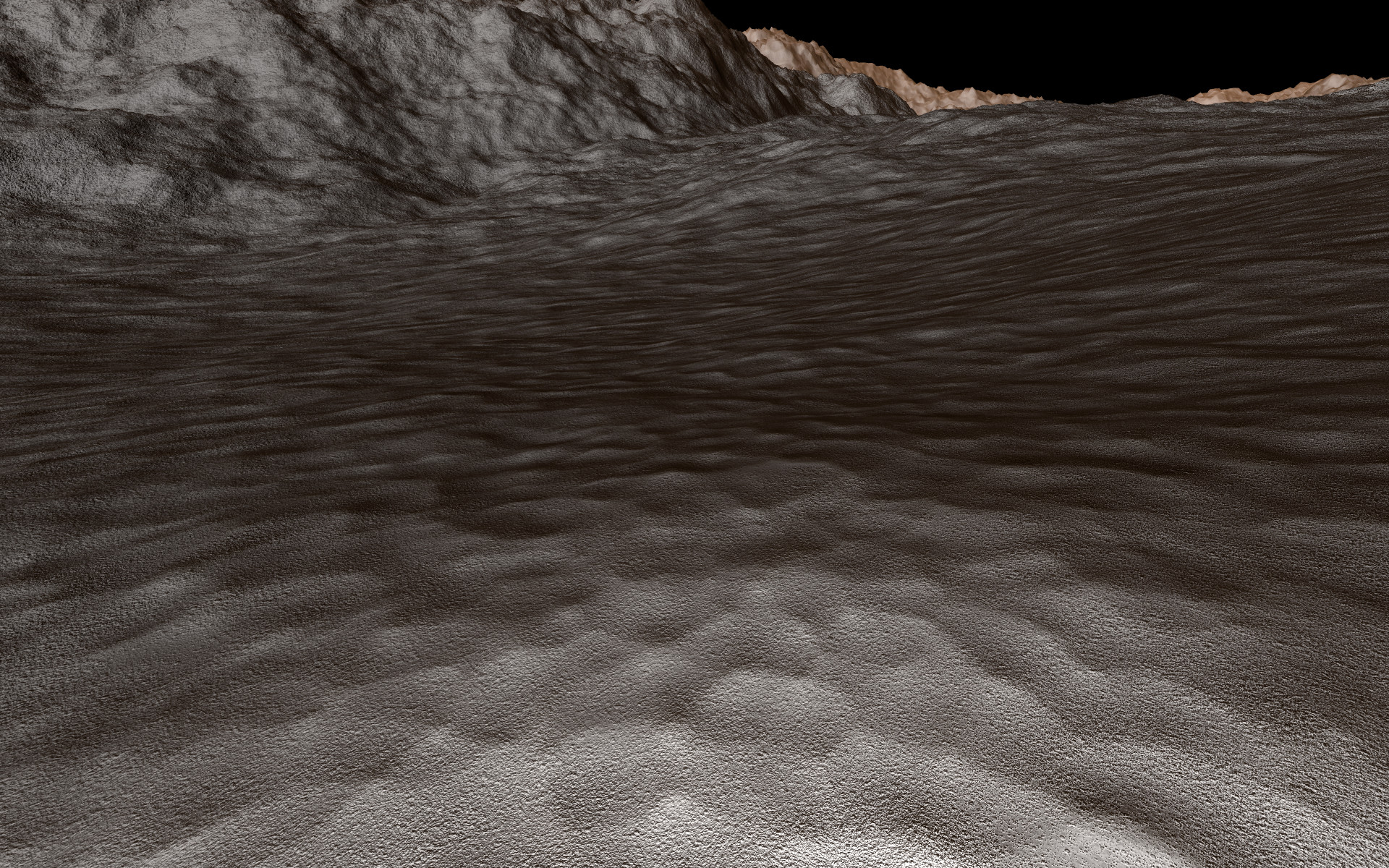

Zoom sequence from space to surface:

I always think that's what most of us have in common. :D

Nice results. I like the smooth, sand-dune-like flat ground vs. the rocky mountains.

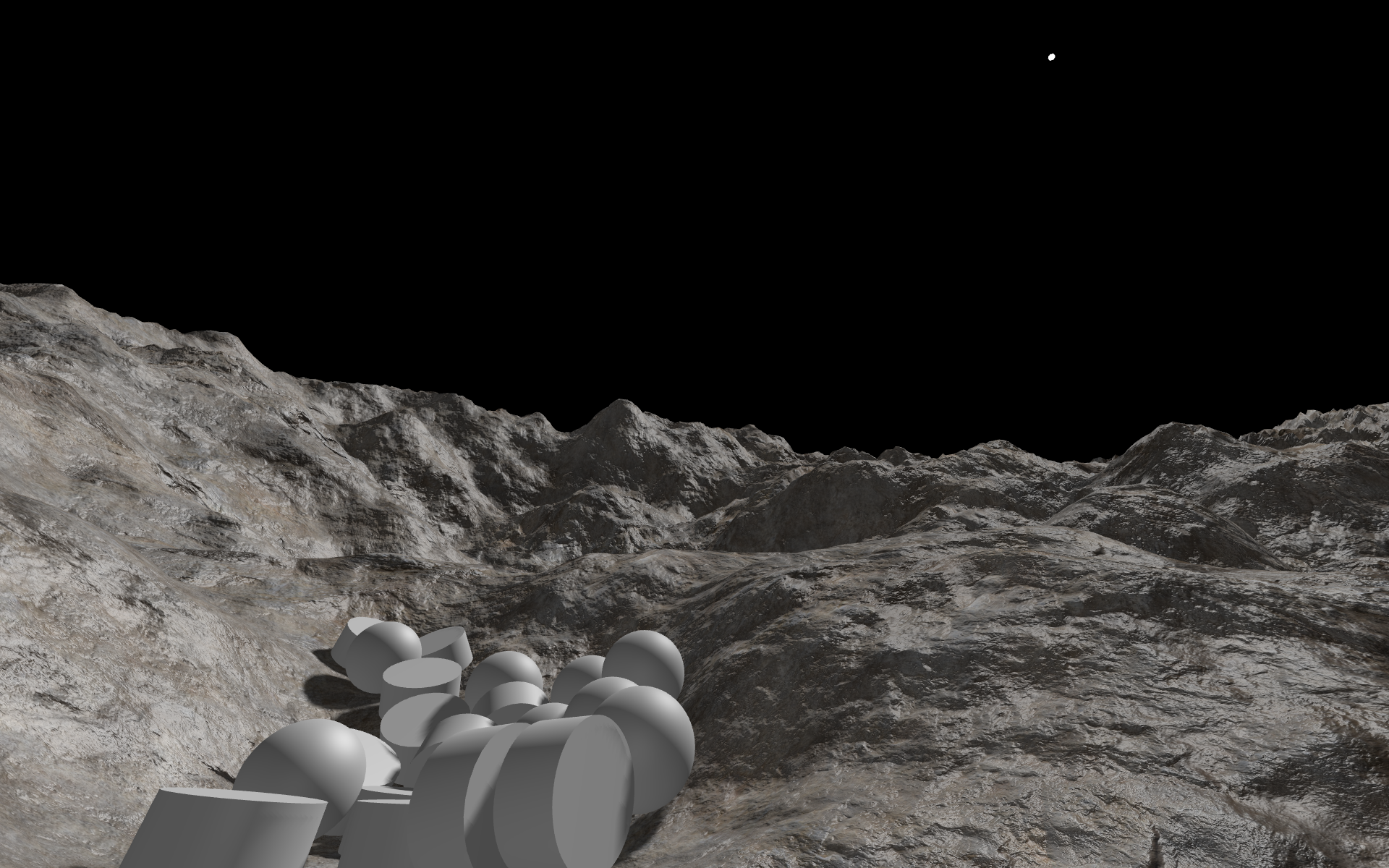

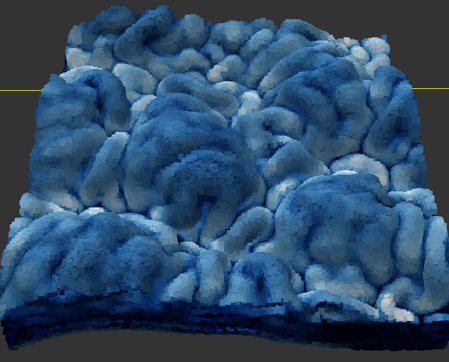

That's what I've worked on for much too long already. Here are the current results at a medium detail level:

Maybe that's the first large-scale terrain simulation that is actually fully 3D. :) At least I haven't found anything else, not even research papers.

I'll likely have to work on forming caves or arches to demonstrate the true 3D advantages, but for now I'm happy it finally starts to look realistic.

For still unknown reasons, erosion in 3D is much harder than with height maps. I had to try dozens of methods until something worked well enough.

And it's orders of magnitude slower than heightmaps. Doing all the steps to make this image takes >30 min, I guess. To get down to ‘human scale’, I'll likely need days of overnight processing. Assuming my HDD is big enough… ;)

But IIRC, something like Gaea takes about a second to make a high-quality 1024^2 terrain on the GPU. So maybe you could do this in the background while flying towards the planet, with procedural upscaling for close-ups.

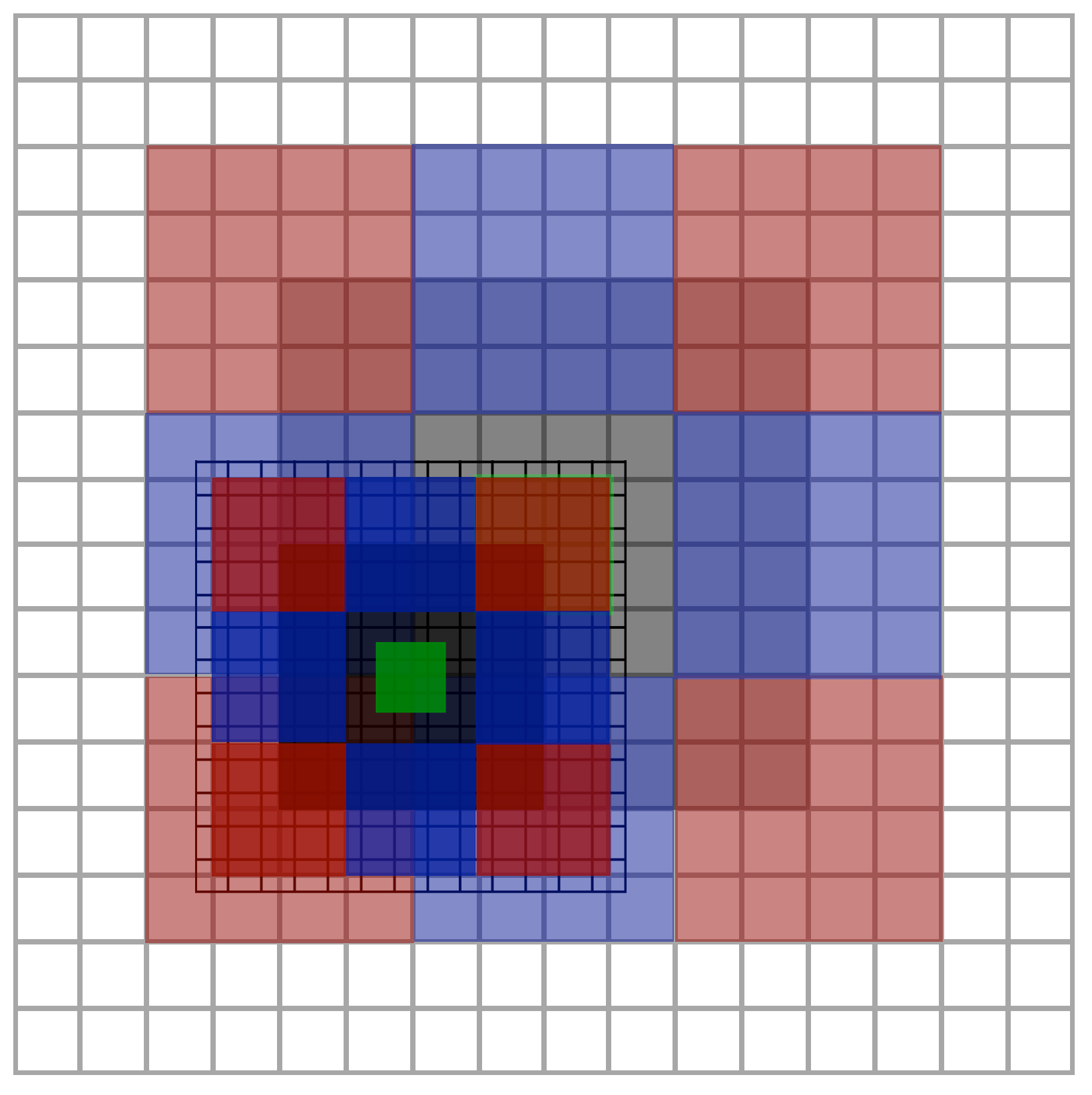

I did a 2D sim as well, to learn how it works, and got nice results:

It takes a few seconds single-threaded on the CPU. And you want the lower frequencies first, then upscale and simulate again to add detail. So this process would match the requirements you have while getting closer to a planet.

I think it would be worth it, because purely procedural generation using noise alone always feels a bit boring and repetitive.

Just a bit of simulation might be able to give huge improvements… :D