This article has since been revised and updated.

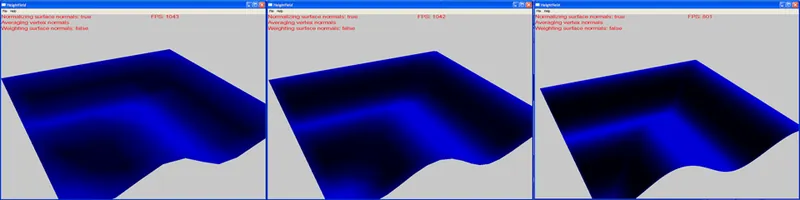

Img1. Hi-Res heightfield rendered with weighted surface normals averaged into a vertex normal

A few months ago I returned to the GameDev.net community after having been away for a few years working on projects in the industry. I've since then spent a good amount of time in the beginners and DirectX forums answering people's questions the best I can. One question which I've seen come up many times is how to compute the normals of a heightfield for lighting calculations. After answering the question three or four times I decided to put together this little article. I hope everyone finds it useful.

The goal of this post is to explain the most common algorithms used for computing surface and vertex normals. After a survey of the most common algorithms we'll take an in-depth look at the two most common algorithms. These in-depth looks will include a logical analysis of why the method is effective, how the math can be simplified based on what we know about heightfields, and the relative performance of the particular algorithm. By the end of the article the reader should be comfortable with computing normals as they pertain to heightfields and be familiar with which method would be best suited for the desired look and performance.

Symbols and Notation

Throughout the rest of the article I will use the following notation to indicate parts of mathematical expressions. Additionally, it should be noted that the absence of an operator between a variable and a number or between two variables should be interpreted as multiplication.

Bold: Bold items are always vectors.

| VecA | Vertical bars indicate "magnitude of" whatever is inside. I.e. The magnitude of Vector A

2 * B The * indicates multiplication of two terms, but will often just be written 2B

B / 2 The slash operator is used for division of two terms

A × B The "cross" indicates cross-product of two terms

A · B The "dot" indicates the dot product of two terms

? Theta is a Greek letter used in this article to represent an angle

? Sigma is a Greek letter used in this article to indicate the summation of many terms

? Delta is a Greek letter used in this article to indicate a "change in" some variable

Demo Attached

The performance calculations, results, and discussions throughout this article are based on the empirical evidence I gathered through research of my own heightfield normal calculations and renderer. Links to download the demo, source code, and a listing of hot keys can be found in Appendix A. Any corrections, comments, or correspondence regarding the demo or this article can be sent to Jeromy Walsh (jwalsh) via a PM here on GameDev.net. Additionally, the source code for this demo is provided as open source. Feel free to modify and/or distribute it for your own purposes. I ask only that you leave the copyright info at the top of the files.

Quick History of Normal Calculations of Polyhedra

Different methods and who pioneered them

The study of computer graphics is still relatively new and has only been an in-depth field for about 40 years. Among the mathematicians and computer scientists who pioneered the field is French Computer Scientist Henri Gouraud. Gouraud is best noted for his research in Smooth shading, often called Gouraud Shading. In 1971 Gouraud released a book titled "Continuous Shading of Curved Surfaces." In the book Gouraud describes the most common method for computing normals. In the remainder of the article we will refer to his algorithm as "Mean Weighted Equally" (MWE). In essence, Gouraud suggested that if you were to compute the normals of each of the facets (surfaces) of a polyhedron then you could get relatively smooth shading by then taking all of the facets which are "attached" to a single vertex and averaging the surface normals. In this way, each surface normal contributes equally to the vertex normal – the vector representing the normal to the polyhedron at that vertex.

Gouraud's algorithm would remain the defacto algorithm for computing per-vertex lighting for more than 20 years. In 1997 however, two German Computer Scientists Thürmer & Wüthrich, proposed a new method for computing normals which was mathematically more accurate. Their algorithm, which is referred to as "Mean Weighted by Angle" (MWA), suggested that facets which are "attached" to a vertex normal should have their contribution to the vertex normal weighted by the angle of the triangle that the vertex is part of. In other words, all three vertices of a triangle have the same surface normal, however, the surface normal can be multiplied by each of the angles of the triangle in order to create three new normals which are weighted versions of the surface normal. These new normals could then be used to compute vertex normals in place of the surface normals used previously.

Not long after Thürmer & Wüthrich proposed their new algorithm a man named Nelson Max published his findings in the Journal of Graphics Tools describing four additional algorithms. I will list these here for brevity, however experiments have been done which show these methods are neither as accurate as MWA or as fast as MWE. For this reason, we will not be exploring these methods further. "Mean Weighted by Sine and Edge Length Reciprocal," "Mean Weighted by Areas of Adjacent Triangles," "Mean Weighted by Edge Length Reciprocals," and "Mean Weighted by Square Root of Edge Length Reciprocals."

Heightfields

What are heightfields used for?

Heightfields serve one primary purpose in video games – to act as the terrain upon which your actors move around and experience the world. To this degree, depending upon the genre of game you're making, players may spend a large amount of time looking at the terrain. With this in mind, it is often desirable to have a terrain which is realistic and attractive to look at. The most effective way to make this possible is by the use of lighting, shadows, textures, etc. Since we're focused primarily on lighting in this article, I'll leave the other elements for another time. Let's take a more detailed look at heightfields.

Heightfield general principles

At the most basic level a heightfield is a one or two dimensional array of data which represents the height of a piece of terrain at a specific (x, z) point in space. In other words, if you were to look at the [x][z] offset within a 2d array or compute the associated index into a one-dimensional array, the value at that location in memory would be the height at point X, Z. The index into a one dimensional array can be computed with the following equation, where numVertsWide is how many vertices are on your terrain in the x dimension:

index = z * numVertsWide + x

The value in memory can be stored as either a floating point value or an integer. In the case of an integer, the values are often stored as a 1 byte (8 bits) or 4 byte (32 bits) block of memory. When the height values are stored as 8-bit integers the heightfield can easily be saved and loaded to disk in the form of a grayscale bitmap. This makes it possible to use a standard image editing application to modify the terrain offline. The same can be done with a 32 bit value, but the alpha value and colors become less intuitive to work with in an image editing program.

When the value in memory is stored as an 8 bit integer it is also common to multiply the height values by a vertical scale component. This enables the heightfield to cover elevations of greater and lower than 255. Depending on the resolution of your terrain, however, 8 bit integers can often seem blocky and unrealistic. For the purpose of this article and demo, we will use 32 bit, single precision Floating Point values to represent height.

In addition to having a vertical scale factor in the case of 8 bit heightfield, it is also common in all cases to have a horizontal scale factor. The reason for this is quite simple. When using indices for your x,z pairs it would force all of your vertices to reside along 1 meter (world units) intervals (i.e. X = 0, 1, 2, 3….Z = 0, 1, 2, 3). As with using 8 bit values for height, forcing your vertices to reside 1 meter apart could cause the terrain to seem unrealistic in many cases. The horizontal scale factor, sometimes called "Scale" or "Units Per Vertex" allows your vertices to be spaced in distances greater or smaller than 1.0. For example, if your scale factor is 0.5f, then all of your vertices would be 0.5 meters apart, leading to more vertices in the same amount of space, and causing your terrain to be a higher "Resolution." It should be noted here that "Scale" and "Resolution" are inversely proportional. As your scale increases, causing a greater distance between your vertices, your resolution, or the complexity of the terrain, decreases.

Finally, although we represent a heightfield as a single array of floating point values in this article, it is common for culling and rendering purposes to break a large heightfield up into smaller "tiles." If this is what you're trying to do, you can think of the heightfield in this article as being a single tile of the larger system. Ultimately, tiling your heightfield means creating a mapping between tiles and vertices, and also extending your normal calculations to take into account the heights of vertices in tiles adjacent to the current tile. You will see later that our algorithm for computing surface normals already takes tiling into account, and with little modification you can get these algorithms to work within a tiled terrain system.

Normal Calculations for Heightfields

Method 1: Mean Weighted Equally (MWE)

Mean Weighted Equally is a two stage algorithm for computing the normal at a vertex in a heightfield (vertex normal). The first stage in the process is to compute the surface normals of each of the triangles in the heightfield using cross-product calculations. The computed surface normals can either be normalized or not, depending on the quality of vertex normals you are looking for. The second stage of the process is to add up all of the surface normals attached to a given vertex and then either average the resultant vertex normal or normalize it. The following two equations are given as the mathematical definitions for the surface and vertex normals.

Surface Normal: Ns = A × B

Vertex Normal: Nnwe = - (Nsi) / 6.0f | i = 0…5

The equation for the surface normal above can be read as "The Surface Normals is equal to the vector A crossed with the vector B" where A and B are two vectors that lie in the plane of the surface.

The equation for the vertex normal above can be read as "The vertex normal is the sum of all surface normals which this vertex is a part of, divided by 6, where the surface normal index is in the range of 0 to 5, inclusive. I should take a moment to note that in Gouraud's algorithm he does not strictly state that there are up to 6 surfaces connected to a single vertex, however this is a mathematical certainty for a heightfield where all of the triangles are oriented the same direction. As well, based on the algorithm we use to implement the above equation each vertex will have exactly 6 surfaces connected to it. I do this as a simplification to help in the computation of vertex normals and to speed up the processing. Now that we've got the equations defined, let's take a closer look at each one, see how they apply to our heightfield, and see if we can't make the equations more efficient.

Computation of surface normals

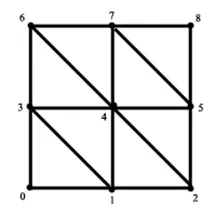

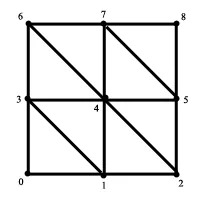

The equation above for surface normals requires first and foremost two vectors A and B which lie in the plane of the surface. For the purpose of this article we can assume we're using a heightfield with vertices laid out as in the diagram to the right.

In the diagram to the right each quad is made up of two triangles, one in the lower left of the quad and one in the top right. For the sake of this article you can assume that we're using DirectX's default left-handed coordinate system and that triangles are oriented positively in a clock-wise ordering. You can also assume that our heightfield lies in the xz-plane, and that y is the coordinate of height. From the diagram you can see that quad 0 is made up of triangles 0-3-1 and 1-3-4. Mathematically speaking a plane or surface can be computed from three points using the cross-product of the vectors formed by two of the edges of the triangle.

Equation for a Normal Vector from a Left-Handed Triangle:

A = P1 – P0; B = P2 – P0

N = A × B

As an example, given triangles 031, 134, and height values as a function of (x,z) pairs you can compute the normals to the triangles with the following equations:

// Triangle 0 Normal

A = 3 – 0; A = ( 0, h3, 1 ) – ( 0, h0, 0 )

B = 1 – 0; B = ( 1, h1, 0 ) – ( 0, h0, 0 )

N0 = [ ( 0, h3, 1 ) – ( 0, h0, 0 ) ] × [( 1, h1, 0 ) – ( 0, h0, 0 ) ]

Optionally normalize N0

// Triangle 1 Normal

A = 3 – 1; A = ( 0, h3, 1 ) – ( 1, h1, 0 )

B = 4 – 1; B = ( 1, h4, 1 ) – ( 1, h1, 0 )

N1 = [ ( 0, h3, 1 ) – ( 1, h1, 0 ) ] × [ ( 1, h4, 1 ) – ( 1, h1, 0 ) ]

Optionally normalize N1

The above equations illustrate how surface normals can be computed for a single quad of the heightfield, however, this is a special case and we need a general form of the equation if we're going to apply it to the terrain as a whole. In general, we'd like an equation that uses i, and j notation, instead of specific x,z pairs to allow us to iterate over the entire terrain and maintain the same equation. So given that i is an index in the range of 0 to numVertsWide – 1 and j is an index in the range of 0 to numVertsDeep – 1, let's see if we can't establish a general equation that works in code such as:

for( j = 0; j < numVertsDeep; j++ )

for( i = 0; i < numVertsWide; i++ )

{ ... } For the triangle in the bottom left, hereafter referred to as Triangle 0, let P0 be the point in the bottom left, P1 be the point in the top left and P2 be the point in the bottom right. If P0, P1, and P2 were a function of the current [i, j] pair, the points could be represented as follows:

P0 = [ i, h0, j ]

P1 = [ i, h1, j+1 ]

P2 = [ i+1, h2, j ]

// Triangle 0

A = P1 – P0

B = P2 – P0

Now that we can represent any point on the heightfield as a function of ‘i' and ‘j', let's re-examine the equation for the normal. Even though we are rephrasing the equations with (i, j) coordinates, the pairs will drop out leaving us with simpler calculations at the end.

// Compute the two vectors used in the cross product

A = P1 – P0; A = (i, h1, j+1) – (i, h0, j); A = ( 0, h1 - h0, 1 )

B = P2 – P0; B = (i+1, h2, j) – (i, h0, j); B = ( 1, h2 - h0, 0 )

// Compute the normal to Triangle 0, using the generic form i,j and the full definition of cross-product

N0 = A × B

N0 = [ AyBz ? AzBy, AzBx – AxBz, AxBy – AyBx ]

N0 = [ ( 0, h1 - h0, 1 ) ] × ( 1, h2 - h0, 0 ) ]

N0 = [ 0( h1 - h0 ) – 1( h2 - h0 ), 1(1) – 0(0), 0( h1 - h0 ) – 1( h1 - h0 ) ]

N0 = [ h0 - h2, 1.0f, h0 - h1 ]

In the equation above the cross product of two orthogonal vectors can be simplified dramatically. The calculation of a cross product normally requires 6 multiplications and 3 subtractions, however once we've computed it to the end we see that many of the terms drop out and that only 2 subtractions are required. Before you reach for the D3DXVec3Cross() function remember that DirectX knows nothing about the nature of your vectors. In this case, based on the orthogonal nature of our vectors it is far faster to compute the cross product ourselves than to let DirectX do it for us. Now that we've computed the cross product of Triangle 0 in general form, let's quickly compute the cross product of Triangle 1 using generic form. Once we're done we'll have the two equations necessary to iterate over the entire heightfield and compute surface normals.

// The 3 points used to compute the top right triangle

P0 = [ i+1, j ]

P1 = [ i, j+1 ]

P2 = [ i+1, j+1 ]

// Compute the two vectors used in the cross product

A = P1 – P0; A = ( i, h1, j+1 ) – ( i+1, h0, j ); A = ( -1, h1 - h0, 1 )

B = P2 – P0; B = ( i+1, h2, j+1 ) – ( i+1, h0, j ); B = ( 0, h2 - h0, 1 )

N0 = [ AyBz ? AzBy, AzBx – AxBz, AxBy – AyBx ]

N0 = [( -1, h1 - h0, 1 ) ] × ( 0, h2 - h0, 1 ) ]

N0 = [ 1( h1 - h0 ) – 1( h2 - h0 ), 1(0) – (-1)(1), (-1)( h2 - h0 ) – (0)( h1 - h0) ]

N0 = [ h1 - h2, 1.0f, h0 - h2 ]

As with the equation for Triangle 0, Triangle 1 also simplifies down to 2 subtractions as one might expect. You are now armed with everything you need to compute the surface normals for every triangle on the heightfield. Simply iterate over the quads, and for each quad compute the two surface normals using the equations above. Before we look at the code for computing the surface normals, lets quickly evaluate the range over which we will iterate.

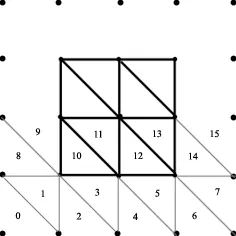

A heightfield will have numVertsWide vertices in the x-direction and numVertsDeep in the z-direction. You could iterate from 0 to numVertsWide – 1 and from 0 to numVertsDeep -1, and that would give you every triangle on the heightfield. Although this would initially seem like a good idea, there is a better way. Instead of computing those ranges, imagine that there is a heightfield that is one size larger than your own in all directions. That is, if you were to place your heightfield inside of this larger heightfield, as indicated in the diagram below, you would see that there is one extra row and column of quads on each edge. The purpose of those extra quads will become clear when we get into vertex normal calculations. For now, just make sure that you allocate enough space to store the extra surface normals and initialize them to the up ( 0.0, 1.0, 0.0 ) vector. Listed below is the code from my demo for computing the surface normals. You will see that although I iterate over a larger range of surfaces I do not actually calculate the normals for those extra quads. I want those quad's surfaces to remain with their normals facing the Up direction. I still iterate through the range, however, because I want my indices to update so that I store my surface normals in the correct place in the array. Follow along:

voidHeightfield::ComputeSurfaceNormals( void )

{

INT normalIndex = 0;

// Iterate through the z components

for( int z = -1; z <= m_NumVertsDeep - 1; z++ )

{

// Iterate through the x components

for( int x = -1; x <= m_NumVertsWide - 1; x++ )

{

// Does the current x,z lie on the terrain, or just outside?

if( !IsValidCoord( x, z ) || !IsValidCoord( x+1, z ) || !IsValidCoord( x, z+1 ) || !IsValidCoord( x+1, z+1 ) )

{

normalIndex += 2; continue;

}

// Set/Compute the normal for triangle 0

float height0 = GetHeight( x, z );

float height1 = GetHeight( x, z+1 );

float height2 = GetHeight( x+1, z );

D3DXVECTOR3 normal1( height0 - height2, 1.0f, height0 - height1 );

// Set/Compute the normal for triangle 1

height0 = height2;

height2 = GetHeight( x+1, z+1 );

D3DXVECTOR3 normal2( height1 - height2, 1.0f, height0 - height2 );

// Set the surface normals in the array

m_pSurfaceNormals[normalIndex++] = normal1;

m_pSurfaceNormals[normalIndex++] = normal2;

}

}

} Computation of vertex normals

The second stage of Gouraud's normal algorithm is calculation of vertex normals. The second stage is far easier than the first stage and is less based on mathematics than on logic. Computing the vertex normals is as simple as taking the adjacent surfaces to the current vertex, adding them up, and dividing by 6. The hard part here is not the division, but instead determining which of the surface normals we computed above are adjacent to the current triangle. The equation for computing vertex normals is:

Vertex Normal: Nnwe = ? (Nsi) / 6.0f | i = 0…5

In order to determine which of the surfaces are adjacent to the current vertex let's once again take a look at the image to the right. In the drawing to the right each of the vertices has a different number of surrounding surfaces. Vertex 0 for example has only one surface adjacent to it. Vertex 1 has three surfaces adjacent, two in the top left, and one in the top right. Vertex 2 has two adjacent surfaces to its upper left. And vertex 4 has six adjacent surfaces – three above it, and three below it. In order to create an algorithm in this example that accurately computes the vertex normal of every vertex we would have to take into account each of the special cases. Is the vertex on an edge – top, left, bottom, right? Is it on a corner – top left, top right, bottom left, or bottom right? Such an algorithm would first have to compute conditional statements to determine which of the above statements is true. Once the condition of the vertex was known it would likely progress through a series of if-statements where certain adjacent surfaces would be summed. There's got to be a cleaner way!

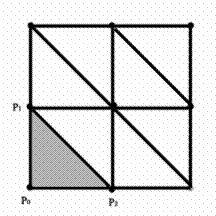

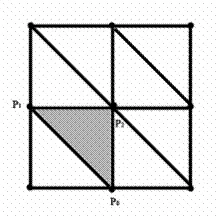

The primary reason we have this problem is because the heightfield has edges. If the heightfield had no edges then all vertices would have 6 surface normals such as the middle vertex to the image to the right. In which case, it might be possible to create an algorithm that reliably determined the six surrounding surfaces for any given vertex. So let's remove the edges from the heightfield! If you recall from the section above on computing the surface normals I illustrated how you should add an extra row and column of quads to each edge. The quads would not be updated but would merely have the UP vector as its normal. The reason we did that is so we could change the previous image to the one just below.

In the image to the right we no longer have edges to our heightfield because of the additional sets of quads. Every vertex on our heightfield now has 6 surrounding surfaces. Based on the direction of our triangles, each vertex has two surfaces to the top left, one to the top right, one to the bottom left, and two to the bottom right. Now imagine there was a small square around any given vertex. The square would be a part of 6 surfaces. If you slide the square to the left, right, up, or down, it is always located in six triangles. Therefore, we will call this algorithm The Sliding Six Algorithm.

The idea behind the algorithm is to put the rectangle in the bottom left corner, determine which surfaces it's part of, and then add those surfaces to a list to be summed for the current vertex index. Let's try it with a few vertices to help you get the hang of it.

Vertex 0

The triangle to the bottom left is triangle 1

The triangle just below it is triangle 2

The triangle to the bottom right is triangle 3

The triangle to the top right is triangle 8

The triangle just above it is triangle 9

The triangle to the top right is triangle 10.

Vertex 1

The triangles on the bottom from left to right are 3, 4, and 5

The triangles on the top from left to right are 10, 11, 12

Vertex 2

The triangles on the bottom from left to right are 5, 6, 7

The triangles on the top from left to right are 12, 13, 14

I wanted to get three vertices above because I wanted you to see the pattern evolve. Each row of triangles is sequential from one vertex to the next. Vertex 0 had surfaces 1, 2, and 3, where vertex 1 had surfaces 3, 4, 5 and so on. This would have continued all the way across the heightfield. The same was true for the top row. So if you could get a starting index for each vertex, we'll call it the triangleIndex, then we'd just need to start with that index and move along two to the right, and the corresponding three to the top and we'd be done. The only question would be how to get the surface which is immediately above the triangle index. That can be obtained from the following equation:

rowOffset = 2( numVertsWide + 1 ) - 1;

Here's the algorithm as implemented in the attached demo:

void Heightfield::ComputeVertexNormals( void )

{

D3DXVECTOR3 vec[6];

D3DXVECTOR3 vertexNormal;

INT triIndex = 1;

INT indexPlusOne = 2;

INT indexPlusTwo = 3;

INT vertIndex = 0;

// Computes the offset based on the number of triangles across

INT rowOffset = ( ( m_NumVertsWide + 1 ) * 2 ) - 1;

for( INT z = 0; z < m_NumVertsDeep; z++ )

{

for( INT x = 0; x < m_NumVertsWide; x++ )

{

indexPlusOne = triIndex + 1;

indexPlusTwo = triIndex + 2;

// Get the three triangles below the vertex

vec[0] = m_pSurfaceNormals[ triIndex ];

vec[1] = m_pSurfaceNormals[ indexPlusOne ];

vec[2] = m_pSurfaceNormals[ indexPlusTwo ];

// Get the three triangles above the vertex

vec[3] = m_pSurfaceNormals[ rowOffset + triIndex ];

vec[4] = m_pSurfaceNormals[ rowOffset + indexPlusOne ];

vec[5] = m_pSurfaceNormals[ rowOffset + indexPlusTwo ];

// Sum the vectors and then divide by 6 to average them

vertexNormal = vec[0] + vec[1] + vec[2] + vec[3] + vec[4] + vec[5];

vertexNormal /= 6.0f;

m_pVertexNormals[vertIndex] = vertexNormal;

triIndex += 2;

vertIndex++;

}

triIndex += 2;

}

}

Method 2: Mean Weighted by Angle

As with the algorithm above, Mean Weighted by Angle (MWA) is a two stage algorithm. The first stage is to compute the surface normals, much as in the algorithm above, except that each surface normal is post-processed before it is used in the computation of vertex normals. Unlike in MWE, the surface normals MUST be normalized or artifacts may appear in the lighting. The second stage of the process is mostly the same as it was in MWE, sum up the associated surface normals and then divide them by 6. Here is the modified formula used in computing the weighted surface normal:

Surface Normal: Ns = -(A ×B)

Since computation of the vertex normal is mostly the same in this algorithm as it is in MWE, we will not cover it again. For specific implementation details and to see how it was slightly modified to access the weighted surface normals see the code in the attached demo.

Computation of surface normals

The first part of the algorithm for MWA is to compute the surface normals, exactly as we did with MWE. After we have computed the surface normal for a given triangle, however, we must post-process it by multiplying it with all three angles of the surface triangle. In doing so we will have created three new "weighted" surface normals that will replace the single surface normal used before. We already know how to get the surface normal so let's explore how to get the angle. The following equation is a commonly used method for getting the angle between two vectors:

Definition of Cross-Product:

?A × B? = ?A??B?sin(?)

? ? sin-1( ?A × B? / ?A??B? )

The above equation, which is generally used as the definition of a cross-product can be re-written as the line just below it. The line below it tells us that the angle between vectors A and B can be determined by taking the Inverse Sine of the Magnitude of the Cross-Product between A and B, and dividing all that by the magnitude of vector A multiplied by the magnitude of vector B. As mentioned above, we already have the cross-product of a specific A and B for the given triangle, however we also need the magnitude of every edge of the triangle. The next step is to determine the most efficient way to compute the magnitude of a vector. The following equations derive the general form of computing the magnitude for the vector which lies along the left edge of Triangle 0.

?V0? = ?( ( ?Vx )2 + ( ?VY )2 + ( ?VZ )2 )

?V0? = ?( ( i – i )2 + ( h1-h0 )2 + ( j+1-j )2 )

?V0? = ?( ( 02 + ( h1-h0 )2 + ( 1 )2 )

?V0? = ?( ( h1-h0 )2 + 1 )

Through simplification we have reduced the magnitude of the vector down to a single multiply, addition, square, and square root. This is NOT an inexpensive equation. The square kills the performance of this algorithm as you will see later. Although we've computed the magnitude for the left edge of the triangle, there are still two more edges, each which have their own magnitudes. Below are the simplified equations for the magnitude of the two other edges of Triangle 0.

?V1? = ?( ( h2-h1 )2 + 2 ) // Hypotenuse of Triangle 0

?V2? = ?( ( h2-h0 )2 + 1 ) // Bottom edge of Triangle 0

As with the equations for normal calculations, the orientation of Triangle 1 is slightly different and as such, has slightly different magnitude equations as well. Here are the equations for the magnitude of the three edges of Triangle 1.

?V0? = ?( ( h1-h0 )2 + 2 ) // Hypotenuse of Triangle 1

?V1? = ?( ( h2-h1 )2 + 1 ) // Top edge of Triangle 1

?V2? = ?( ( h2-h0 )2 + 1 ) // Right edge of Triangle 1

With the six equations above you have the ability to compute the magnitude of each of the edges of both the bottom left and top right triangles of every quad on the heightfield. The next thing to do is to plug them into the equation for Theta(angle) above, and compute the angle of each corner of the triangles. We will do Triangle 0 here for demonstration, Triangle 1 will be left up to the reader to prove, however the source code will be provided.

? ? sin-1( ?A × B? / ?A??B? )

// Compute the angle in the bottom left corner of Triangle 0

?V0? = ?( ( h1-h0 )2 + 1 ) // Left edge of Triangle 0

?V2? = ?( ( h2-h0 )2 + 1 ) // Bottom edge of Triangle 0

? ? sin-1( ?Ns? / ? V0?? V2? ) where Ns is the already computed normal to the surface of the triangle

To compute the weighted surface normal for the vertex in the bottom left corner of Triangle 0 do the following:

Nw0 = ? * Ns

Listed here is the modified code for computing surface normals which iterates over every quad on the heightfield and computes the weighted surface normals for Triangle 0 and Triangle 1.

void Heightfield::ComputeWeightedSurfaceNormals( void )

{

INT normalIndex = 0;

// Iterate through the z components

for( int z = -1; z <= m_NumVertsDeep - 1; z++ )

{

// Iterate through the x components

for( int x = -1; x <= m_NumVertsWide - 1; x++ )

{

if( !IsValidCoord( x, z ) || !IsValidCoord( x+1, z ) || !IsValidCoord( x, z+1 ) || !IsValidCoord( x+1, z+1 ) )

{

normalIndex += 6;

continue;

}

// Set/Compute the normal for triangle 1

float height0 = GetHeight( x, z );

float height1 = GetHeight( x, z+1 );

float height2 = GetHeight( x+1, z );

D3DXVECTOR3 normal1( height0 - height2, 1.0f, height0 - height1 );

D3DXVec3Normalize( &normal1, &normal1 );

// Get the Magnitude of the normal to the surface and the weights

float normal1Mag = D3DXVec3Length( &normal1 );

float abMag = sqrt( (height1 - height0) * (height1 - height0) + 1 );

float acMag = sqrt( (height2 - height0) * (height2 - height0) + 1 );

float bcMag = sqrt( (height2 - height1) * (height2 - height1) + 2 );

float theta0 = asin( normal1Mag / ( abMag * acMag ) );

float theta1 = asin( normal1Mag / ( bcMag * abMag ) );

float theta2 = asin( normal1Mag / ( bcMag * acMag ) );

m_pWeightedSurfaceNormals[normalIndex++] = normal1 * theta0;

m_pWeightedSurfaceNormals[normalIndex++] = normal1 * theta1;

m_pWeightedSurfaceNormals[normalIndex++] = normal1 * theta2;

// Set/Compute the normal for triangle 2

height0 = height2;

height2 = GetHeight( x+1, z+1 );

D3DXVECTOR3 normal2( height1 - height2, 1.0f, height0 - height2 );

D3DXVec3Normalize( &normal2, &normal2 );

// Get the Magnitude of the normal to the surface and the weights

float normal2Mag = D3DXVec3Length( &normal2 );

abMag = sqrt( (height1 - height0) * (height1 - height0) + 2 );

acMag = sqrt( (height2 - height0) * (height2 - height0) + 1 );

bcMag = sqrt( (height2 - height1) * (height2 - height1) + 1 );

theta0 = asin( normal2Mag / ( abMag * acMag ) );

theta1 = asin( normal2Mag / ( bcMag * abMag ) );

theta2 = asin( normal2Mag / ( bcMag * acMag ) );

m_pWeightedSurfaceNormals[normalIndex++] = normal2 * theta0;

m_pWeightedSurfaceNormals[normalIndex++] = normal2 * theta1;

m_pWeightedSurfaceNormals[normalIndex++] = normal2 * theta2;

}

}

// end surface normal calculations

}

Analysis of the performance of each approach

During the course of writing this article I decided to create a demo which illustrated the different topics in action. As I was researching the best way to implement the various heightfield algorithms I came upon some contradictions. Some people said you should normalize the surface normals before using them in any vertex normal calculation, some said it didn't matter. Also, many said the best way to compute the vertex normal was to average the surface normals, and others said just add up the surface normals and then normalize the resulting vector. Finally, there's the question of which algorithm looks best and performs best between Mean Weighed Equally and Mean Weighted by Angle. This section will attempt to compare and contrast the differences between the various methods.

It should be understood that the performance analysis I give below is based upon only have a single heightfield in the scene, with no other objects, which is animating on a Sine curve every frame. Actual performance in real-time situations could be both better and worse than those given below based upon whether your terrain is static or dynamic, and what else you have going on in your scenes. The information provided below is strictly for the purpose of comparing computational complexity of the different algorithms.

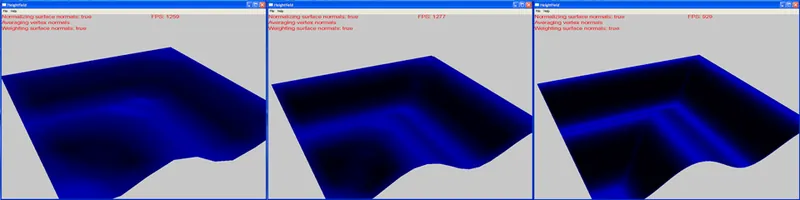

Img. High resolution terrain rendered without and with surface normals

MWE, Non-Normalized Surface Normals vs. Normalized Surfaces (Averaged Vertex Normals)

From a visual perspective I found that normalizing the surface does result in both darker looking terrain as well as smoother curves. Additionally, at relatively low resolutions there is no observable performance penalty for normalizing the surface normals. At very high resolutions, however, I found that normalizing the surfaces could lower the frame rate by as much as 6% (318 fps – 300 fps). The larger my resolution the more obvious the difference between normalized and non-normalized surface normals. With that being said, I found that normalizing the vertex normals with MWE can eliminate the need to normalize the surface normals.

Img. High resolution terrain rendered with averaging and normalizing of vertex normals with surface normals normalized. Very little difference.

MWE, Normalizing vs. Averaging of Vertex Normals

From a visual perspective, normalizing the vertex normals only really matters when the surfaces are not normalized. In either event normalizing the vertex normals has the effect of making the terrain look a bit lighter and spreads out the shadows. This difference however is most noticeable when viewed with the surface normals not normalized. Especially at higher resolutions if the surface normals were not normalized the vertex normals must be normalized. As with normalizing the surface normals, there is almost no noticeable performance penalty at lower resolutions for normalizing the vertex normals. At higher resolutions however, normalizing the vertex normals can drop the frame rate by an additional 6%. Given that normalizing both the surface and vertex normals incurs a 6% stackable framerate loss, and given the relatively little benefit gained from normalizing the vertex normals when the surface normals are averaged, my advice is to just normalize the surface normals and average the vertex normals. This will be doubly true when we get to MWA.

MWA, Non-Normalized Surface Normals vs. Normalized Surfaces (Averaged Vertex Normals)

Whereas normalizing the surfaces was optional for MWE, it is essential for MWA. When I render the weighted vertex normals without first normalizing the surfaces I get strange artifacts in the triangles which are perfectly flat. They either show up entirely black or entirely white. On the positive side, the amount of computation required to compute the angles far outweighs that which is required for normalizing the vectors. Always normalize the surface normals when using weighted vertex normals.

Img. High resolution terrain rendered with weighted surface normals and either normalized or averaged vertex normals.

MWA, Normalizing vs. Averaging of Vertex Normals (Normalized Surfaces)

This switch is probably the most interesting of all the other variables thus far. At lower resolutions the increased light caused by normalizing the vertex normals as opposed to averaging can make the terrain look more attractive. On the other hand, as the resolution of the terrain increases the extra light makes the terrain look unrealistic. A high resolution terrain with surface normals normalized and vertex normals averaged was the most realistic looking terrain I have yet to see. At the highest resolution the ripples in my terrain made it look like fine satin. The only downside is that the computation time for the weighted normals is quite extensive. In my experiences weighting the normals dropped my framerate from about 80% (1000 fps – 200 fps). The thing to keep in mind is that I was updating the height values and recomputing the normals every frame. In reality, there is likely to be very little run-time updating of weighted terrain normals. For that reason, weighted normals should still be considered a very viable solution when seeking very attractive and believable terrain.

Future research / other areas of interest

In addition to the topics I have covered in this article, I was asked to cover two additional areas of interest. Unfortunately, I did not have time to fully explore these topics. I list them here so the reader is aware of them and can do their own research if interested.

Smoothing Groups

Smoothing groups are most familiar to users of applications such as 3D Studio Max and other Modeling Packages. Smoothing groups are essentially sub-sections of vertices with shading which does not obey the same lighting rules as their surrounding vertices. This is useful in the case of a cylinder, for example. The cylindrical section of a cylinder is smooth and should thus have a smooth shading algorithm applied to it. The top however, or rather the transition from smooth edge to top, should not necessarily be a smooth transition. The user expects that the ring separating the edge and top is a hard edge and should thus not have averaged normals at those vertices. Smoothing groups allows you to do this by duplicating vertices and then setting different normals for one set of vertices than the other.

In the case of heightfields, smoothing groups could be useful at the peaks of tall mountains or at the edges of cliffs and drop offs. Either of the algorithms above would cause a smooth curve down the steep drop. In reality, there are often sudden drops in which there is not a smooth curve. Smoothing groups can then be used to give your terrain more realistic edges.

Computation of Normals from Parametric Equations

Another option is the computation of normals from some parametric equation. The best example of this would be a sphere. Normally a sphere is represented by an array of triangles which approximate the surface of a sphere. To compute the normal of any of those vertices one could use the usual method of crossing edges of a triangle. On the other hand, mathematically the normal to any point on the surface of a sphere is just the direction vector from the center of the sphere to that point on the surface. With this in mind, it is possible to compute a faster and/or more accurate surface normal on a sphere by using the mathematical definition of a sphere than computing normals via cross-product.

The application of this on a heightfield is less obvious. I can envision this only being useful when your terrain is procedurally generated from a well known function. In the demo I provided the positions of all the vertices are computed from a Sine function. In this example, it should be possible, knowing that the height of my vertices range from 0 to 1 as a function of 0 to 360 degrees, to compute the normal at each of the vertices based on the angle between 0 and 360 of that particular vertex. This will be left up to the reader to implement.

Conclusion

During the past two weeks I have had the opportunity to write a demo which explores the implementation of lighting normals of a heightfield while at the same time being able to make empirical observations about the usefulness and performance of each of the different popular algorithms. This article contains all that I have discovered about computing normals for lighting of a heightfield and I hope that it has been both informative and helpful. Based on my findings I will implement my heightfields using normalized, weighted surfaces, and averaged - not normalized, vertex normals whenever possible. For those that are part of the GameDev.net community I hope to see you on the forums and I look forward to your feedback regarding this article. Feel free to send your code corrections and/or comments directly to me via PM.

Appendix A: Demo Description, Links, and Key bindings

The screen shots, performance comparisons, code snippets, etc. of this article were all based upon information gathered from the attached demo. The demo was an opportunity for me to test various types of functionality and to determine the visual and performance impacts of those features. Because of that, the demo has a limited amount of functionality.

References

- "A Comparison of Algorithms for Vertex Normal Computation", Shuangshuang Jin, Robert R. Lewis, David West, 2003, Washington State University