- Download demo - 2.67 KB

License: Ms-PLIntroduction

.NET 4 brings a powerful Task library to support a piece of code to run in parallel processors. It just simply spawns threads into multiple processes using the newly written Task libraries (System.Threading.Tasks) in Mscorlib 4.0. Task libraries contain methods like For, ForEach, and Invoke to support parallelism in .NET languages, which I will discuss in detail later on.

Let's see what's got changed in .NET 4.0 to bring up parallel extensions.CLR Thread Pool 4.0

The old thread pool of .NET 2.0 used to support only 1-4 processors, where the "single queue", "single lock" schema was sufficient. The improved thread pool in .NET 4.0 can hold up to 16-256 processors.- The new ThreadPool significantly reduces the synchronization overhead associated with pushing/pulling work items to/from the pool. While in previous versions, the ThreadPool queue was implemented as a linked list protected by a big lock, the new version of the queue is based on the new array-style, lock-free, GC-friendly ConcurrentQueue class.

- The CLR 4.0 thread pool also supports a local queue per task, and implements a work stealing algorithm that load balances the work amongst the queues.

- In the 4.0 version of the CLR thread pool, the 'thread injection and retirement algorithm' has changed. In cases where there are more running threads than CPUs, instead of creating new threads at the rate of 1 thread per 0.5 second, the new thread pool takes the liberty to 'hold back' threads (i.e., reduce the concurrency level) for a while.

MSCORLIB 4.0

System.Collections.Concurrent Namespace

The System.Collections.Concurrent namespace provides several thread-safe collection classes that should be used in place of the corresponding types in the System.Collections and System.Collections.Generic namespaces whenever multiple threads are accessing the collection concurrently.

System.Threading.Tasks Namespace

The System.Threading.Tasks namespace provides types that simplify the work of writing concurrent and asynchronous code. The main types are System.Threading.Tasks.Task, which represents an asynchronous operation that can be waited on and cancelled, and System.Threading.Tasks.Task(Of TResult), which is a task that can return a value. The Factory class provides static methods for creating and starting tasks, and the System.Threading.Tasks.TaskScheduler class provides the default thread scheduling infrastructure..NET 4 Cancellation Framework

A very interesting addition to .NET 4 is a set of new types that specifically assist with building cancellation-aware applications and libraries. The new types enable rich scenarios for convenient and safe cancellation, and help simplify situations that used to be be difficult and error-prone and non-composable.

Two new types form the basis of the framework: a CancellationToken is a struct that represents a 'potential request for cancellation'. This struct is passed into method calls as a parameter, and the method can poll on it or register a callback to be fired when cancellation is requested. A CancellationTokenSource is a class that provides the mechanism for initiating a cancellation request, and it has a Token property for obtaining an associated token. It would have been natural to combine these two classes into one, but this design allows the two key operations (initiating a cancellation request vs. observing and responding to cancellation) to be cleanly separated. In particular, methods that take only a CancellationToken can observe a cancellation request but cannot initiate one.How it Works

Now you have got enough familiarization of the changes in .NET to bring up code parallelization. Let's start with a simple entity of parallelization called Task in our case. The new System.Threading.Tasks.Task class takes care of thread allocation and synchronization; yeah, it's internally using threads, but in a very efficient way.

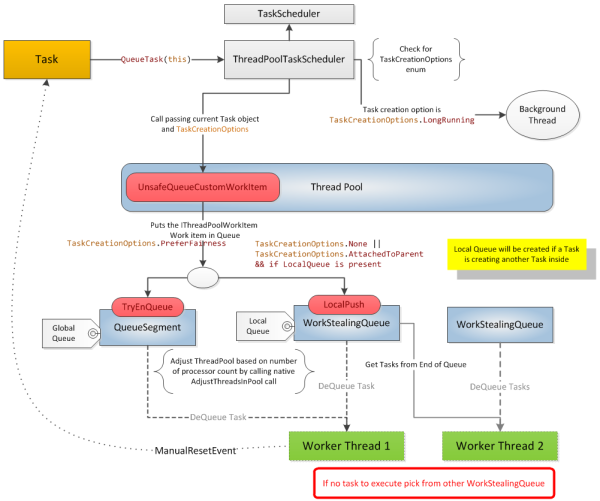

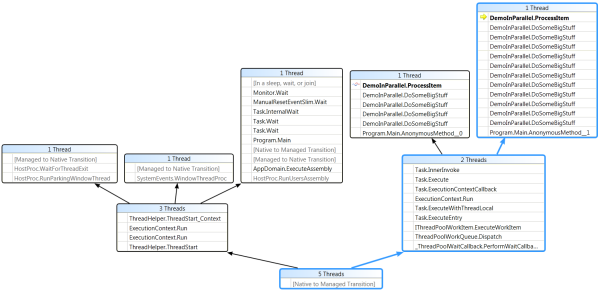

The Task class implements the IThreadPoolWorkItem interface, which is responsible for executing work items from the thread pool queue. The full flow of task parallelization is shown in the diagram below:

The ThreadPoolTaskScheduler class pushes Tasks (IThreadPoolWorkItem) to a global queue using the ThreadPool API. Worker threads picks up the tasks from Global Queue (QueueSegment). Once the processing is done, it informs the task by calling the manual reset event of System.Threading. If a task is created within a task, then it will not be pushed to the Global Queue, but instead maintained in a local queue (work stealing queue). Any task item in the work stealing queue can be shared by any other worker thread. How does work stealing work? The worker thread looks first into the local queue; if there is no work item to pick, then it searches the global queue. Still, if there is no work for the thread, it will look for any pending work item in other queues. So during the task processing, none of the threads are sitting idle, which actually gives 100% utilization of all cores of machines.

I think we have a lot of benefits of Local Queues and Work Stealing Queues, which were missing in the legacy thread pool, like less contention in the Global Queue, thread workers are effectively used, and many more. One thing to notice is that the huge performance improvement is also caused by changing the linked list based queues to array style queues; all the local and global queues are array based, which means there is no Big Lock for concurrency.

Let's hit Visual Studio now..

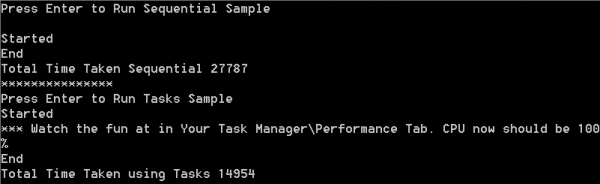

I ran the below sample of code in my dual core machine, better to try in a quad core.

//In Static void Main

DoSomeBigStuff(1, 10000);

DoSomeBigStuff(2, 10000);

//In some class

public void DoSomeBigStuff(int inp,int limit)

{

ProcessItem();

if (inp > limit)

{

return;

}

DoSomeBigStuff(++inp, limit);

}

public void ProcessItem()

{

Thread.SpinWait(100000);

}

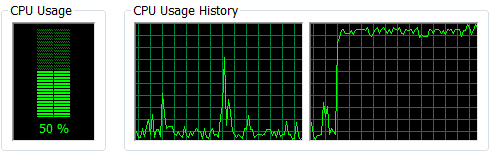

Running this on my dual core system gets the result in 8431ms, but if you check out the CPU performance, only 50% of the CPU is getting used.

Let's make some changes to the above code, and put DoSomeBigStuff in a task:

Task t1 = new Task(() =>

{

DoSomeBigStuff(1, 10000);

});

Task t2 = new Task(() =>

{

DoSomeBigStuff(2, 10000);

});

The CPU picture totally changes and now starts using both the cores. The code finishes in 4589 ms, which is a drastic improvement. Now I don't have to think about scaling up my code; just run the same code on an 8 proc machine and it will take lesser than 1000ms. The Task class encapsulates the logic of handling the number of threads based on processors and work item allocation.

There is some overhead associated with threading, so the individual tasks may take a little longer - in this case, they went from about 82 milliseconds for the single-threaded example to a wide range, some as high as 300 milliseconds, in the parallel.

This overhead should be part of your decision about when and what to parallelize in your application. What's more important for your application: that it finishes faster, or uses less total CPU time? Another consideration is ordering of the work.

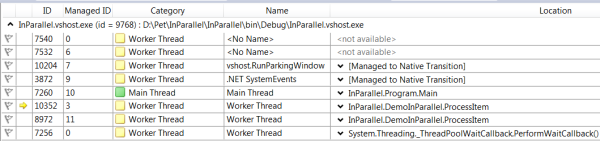

Let's look at the threads. Check the thread debug window; you can see two new threads spawned with the Main thread.

The Parallel Library has started two threads to do the work equal to the number of cores. One more thread got created, which handles the callbacks from the queue to the main thread.

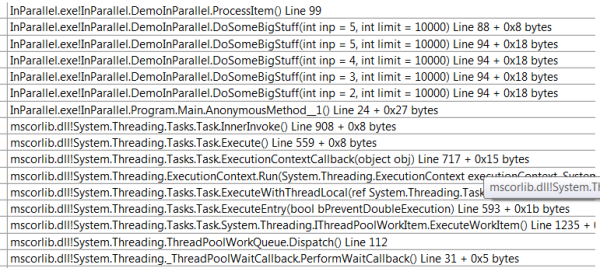

Check out the call stack of a single thread; it does the same stuff as we discussed above in the diagram.

You can figure out what the Execute method is doing; it just pushes the item into the global queue. The interesting method that I want to discuss here is ThreadPoolWorkQueue.Dispatch(). Let's go step by step in this method.

ThreadPoolGlobals.workQueue.MarkThreadRequestSatisfied();

This decrements the outstanding thread request, which means it gets the thread out of the pool.

ThreadPoolWorkQueueThreadLocals tl =

ThreadPoolGlobals.workQueue.EnsureCurrentThreadHasQueue();

This creates a Local Queue if not already created for the current thread worker.

ThreadPoolGlobals.workQueue.Dequeue(tl, out callback, out missedSteal);

This dequeues the task from the work queue. The Dequeue method is intelligent enough to pick the task if not present in the global or local queue; then it tries to steal the task from other queues.

callback.ExecuteWorkItem();

ThreadPool.NotifyWorkItemComplete();

ThreadPoolGlobals.workQueue.EnsureThreadRequested();

Execute the work item and notify the thread pool that the work is completed. If successful, it returns the thread back to the pool.

All this looks simple to me, but efficient logic to work out with threads. The picture below will give quite a good idea about the thread handling part done by the Task libraries.

What's Extra

The Task Parallel library provides one more class to play with, called Parallel. The APIs present in the Parallel class are used for iterations like For, ForEach, and Invoke. All these extension methods use the same Task libraries discussed above.

We will see one more example before proceeding ahead.

for (int i=0; i < 1000; i++){

Calculate(i);

}

public void Calculate(int pos){

double result = new Random().NextDouble();

for (int i = ; i < 20000; i++){

result += Math.Acos(new

Random().NextDouble());

}

}

Parallel.For(, 1000, delegate(int i)

{

Calculate(i);

}

);

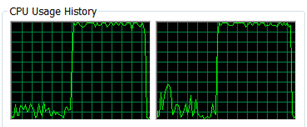

Completed in half of the time compared to the legacy For loop.

Parallel.For directly jumps into the ForWorker method with the following params in the Parallel class:

ForWorker<object>(fromInclusive, toExclusive,

s_defaultParallelOptions, body, null, null, null, null);

Internally, the ForWorker method creates an instance of ParallelForReplicatingTask which inherits from Task, with the default set to InternalTaskOptions.SelfReplicating. ForWorker creates an empty root task, and iteration tasks are created as child tasks of the root task.

rootTask.RunSynchronously(parallelOptions.EffectiveTaskScheduler);

This method starts the self-replication process of child tasks, and starts queuing them in the local queue. The Parallel class decides the number of thread workers which is equal to the number of processors.

In all methods of the Parallel class, it's been taken care that if any cancel task request comes, the threads will not be aborted, but cancelled softly.

While self replicating, .NET has to make sure the following points:- Self-replicating should have an inter-replica communication mechanism for communicating the completion of the overall activity.

- Only use when the cost of this communication and the management of partitions is considerably less than the potential benefit gained from parallelism.

- Do not assume that the task is always replicated. It is only replicated if there are available resources. For the same reason, do not assume that there will be a specific number of replicas.

- In some instances, the number of replicas could far exceed the number of cores in the machine.

- You may choose to use optimistic concurrency when it is possible to correctly deal with multiple executions of the same step.

And I think they have done a good job in handling the number of thread workers, using concurrency queues and the effective cancellation framework.

Here is the demo source code:

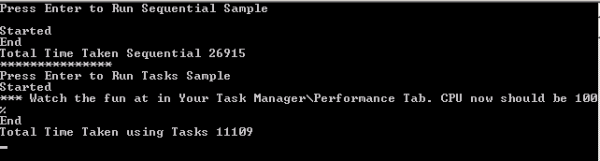

Below are the results after running the source on a dual core and quad core:

A Bonus Item

Visual Stuido Team Launched Concurrency Analyzer: If you have VS 2010, you can see the option under Analyze->Launch Performance Wizard->Concurrency.

It generates a full analysis report with graphs about the thread and cores usage. If building a thread intensive app, then you should go through this analysis. Also see the Concurrency Analyzer Video.

Good job done .NET Parallel Extension team!!License

This article was authored by Manabendra Roy (Manab) and reproduced for the benefit of our viewers under the terms of the Ms-PL license.

- Self-replicating should have an inter-replica communication mechanism for communicating the completion of the overall activity.

- The new ThreadPool significantly reduces the synchronization overhead associated with pushing/pulling work items to/from the pool. While in previous versions, the ThreadPool queue was implemented as a linked list protected by a big lock, the new version of the queue is based on the new array-style, lock-free, GC-friendly ConcurrentQueue class.

Advertisement

Recommended Tutorials

Advertisement

Other Tutorials by GameDev.net

Advertisement