Intention

This article is intended to give a brief look into the logistics of machine learning. Do not expect to become an expert on the field just by reading this. However, I hope that the article goes into just enough detail so that it sparks your interest in learning more about AI and how it can be applied to various fields such as games. Once you finish reading the article, I recommend looking at the resources posted below. If you have any questions, feel free to message me on Twitter @adityaXharsh.

How Neural Networks Work

Neural networks work by using a system of receiving inputs, sending outputs, and performing self-corrections based on the difference between the output and expected output, also known as the cost.

Neural networks are composed of neurons, which in turn compose layers, or collections of neurons. For example, there is an input layer and an output layer. In between the these two layers, there are layers known as hidden layers. These layers allow for more complex and nuanced behavior by the neural network. A neural network can be thought of as a multi-tier cake: the first tier of the cake represents the input, the tiers in between, or lack thereof, represent the hidden layers, and the last tier represents the output.

The two mechanisms of learning are Forward Propagation and Backward Propagation. Forward Propagation uses linear algebra for calculating what the activation of each neuron of the next layer should be, and then pushing, or propagating, those values forward. Backward Propagation uses calculus to determine what values in the network need to be changed in order to bring the output closer to the expected output.

Forward Propagation

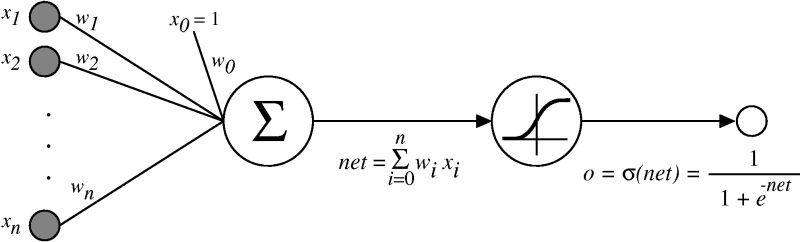

As can be seen from the gif above, each layer is composed of multiple neurons, and each neuron is connected to every other neuron of the following and previous layer, save for the input and output layers since they are not surrounding by layers from both sides.

To put it simply, a neural network represents a collection of activations, weights, and biases. They can be defined as:

- Activation: A value representing how strongly a neuron is firing.

- Weight: How strong the connection is between two neurons. Affects how much of the activation is propagated onto the next layer.

- Bias: A minimum threshold for whether or not the current neuron's activation and weight should affect the next neuron's activation.

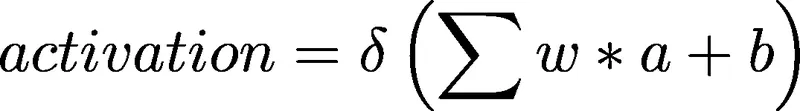

Each neuron has an activation and a bias. Every connection to every neuron is represented as a weight. The activations, weights, biases, and connections can be represented using matrices. Activations are calculated using this formula:

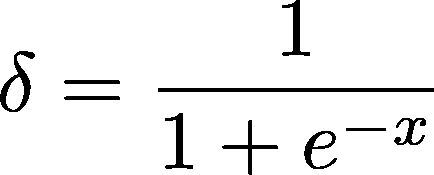

After the inner portion of the function has been computed, the resulting matrix gets pumped into a special function known as the Sigmoid Function. The sigmoid is defined as:

The sigmoid function is handy since its output is locked between a range of zero and one. This process is repeated until the activations of the output neurons have been calculated.

Backward Propagation

The process of a neural network performing self-correction is referred to as Backward Propagation or backprop. This article will not go into detail about backprop since it can be a confusing topic. To summarize, the algorithm uses a technique in calculus known as Gradient Descent. Given a plane in an infinite number of dimensions, the direction of change that minimizes the error must be found. The goal of using gradient descent is to modify the weights and biases such that the error in the network approaches zero.

Furthermore, you can find the cost, or error, of a network using this formula:

Unlike forward propagation, which is done from input to output, backward propagation goes from output to input. For every activation, find the error in that neuron, how much of a role it played in the error of the output, and adjust accordingly. This technique uses concepts such as the chain rule, partial derivatives, and multi-variate calculus; therefore, it's a good idea to brush up on one's calculus skills.

High Level Algorithm

- Initialize matrices for weights and biases for all layers to a random decimal number between -1 and 1.

- Propagate input through the network.

- Compare output with the expected output.

- Backwards propagate the correction back into the network.

- Repeat this for N number of training samples.

Source Code

If you're interested in looking into the guts of a neural network, check out AI Chan! It's a simple to integrate library for machine learning I wrote in C++. Feel free to learn from it and use it in your own projects.

https://bitbucket.org/mrsaturnsan/aichan/

Resources

http://neuralnetworksanddeeplearning.com/

https://www.youtube.com/channel/UCWN3xxRkmTPmbKwht9FuE5A