Introduction

The Game Convention Developer Conference (GCDC, August 20-22) in Leipzig is not just another game development conference. Its proximity to the Game Convention trade fair and show makes it a great conference, as game developers come in huge numbers from all around the world to showcase their games and to find new business partners. This year was no exception, with appearances by Bruce Shelley (Ensemble Studios), Don L. Daglow (Stormfront) and many more (the only downside being the absence of Peter Molyneux, who was not able to come this year).

As an incentive to keep you reading this long article, I saved the best part for the end. But don't cheat by jumping directly to the last page - I believe that there are good things in every talk and session I report on here, and you'll miss a lot by going straight to the conclusion.

Ed Note: All audio podcasts have been removed from this version of the article. To view the article with audio streams, please use the featured version.

In This Article

Practical multi-threading for game performance

Better Games through Usability

Procedural Texture Generation Theory and Practice

What makes games Next Gen?

Interactive ray tracing in games

Designing by Playing

Engine Panel

Post Mortem on Titan Quest

The Game Convention

Practical multi-threading for game performance

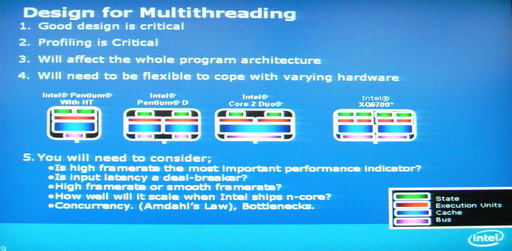

Leigh Davis (Intel) gave us a great presentation about what one should consider when writing multithreaded games. He was seconded by Doug Binks (Crytek) who explained to us how multithreading is used in Crytek's CryEngine2.

In the coming years, Intel is going to push its multicore architecture to deliver even more performance: Penryn (Core 2 Duo at 45 nm) is due this year, and next year will see the release of the Nehalem CPU - 4 cores with 8 hardware threads. In recent years the company has delivered many interesting technologies, from the first hyperthreaded processors to the more recent Core 2 Duo. The obvious goal of this new architecture is to make more powerful processors and to bypass the current limitations of processor manufacturing technology: there are limits to miniaturization and to the clock frequencies that can be handled. We all know why Intel, AMD, IBM and other chipmakers are releasing multicore processors: they scale far better than single-core processors when it comes to improving performance.

But if you want to improve your game's performance, you have to choose the right software architecture - and this is where programmers need tips and education from the various chipmakers.

If you want to take advantage of the most recent processor architecture, your software has to be designed accordingly:

- You need a good understanding of how the processor cache is handled in order to use it efficiently. This is especially true on multicore processors where not all cores share the same cache: if one core is modifying a data set while another core is reading from it, both caches need to be synchronized and you lose a lot of performance.

- When talking to the graphics driver, avoid calls that stall - for example, calls that return the GPU state.

- You also need to have a firm grip on how your OS is handling threading. While it is sometimes handy to assign a particular thread to a core (for example, to avoid cache issues), one should be very careful about this.

- Better scheduling of the operations that take place in your game will lead to better performance. Try not to leave a core idle while it waits for another thread. Ideally, synchronization between threads should not take any time at all. Also, consider dependencies between operations.
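The last point, scheduling work around dependencies, can be sketched in a few lines. This is only an illustration, not code from the talk: the task names, the `run_frame` function and the `FRAME_DEPS` table are hypothetical, and Python (3.9+ for `graphlib`) is used for readability even though its threads will not give the speed-up a native engine would get. The idea is simply that a task is handed to a worker as soon as everything it depends on has finished, so independent tasks never wait on each other.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter

# Hypothetical per-frame dependencies: task -> tasks that must finish first.
FRAME_DEPS = {
    "physics": {"input"},
    "animation": {"input"},
    "render": {"physics", "animation"},
}


def run_frame(tasks, deps, workers=4):
    """Run callables from `tasks`, starting each one once its dependencies are done.

    Returns the task names in the order they completed.
    """
    sorter = TopologicalSorter({name: deps.get(name, set()) for name in tasks})
    sorter.prepare()  # raises CycleError if the dependencies are circular
    finished = []
    running = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        while sorter.is_active():
            # Submit every task whose dependencies are all satisfied.
            for name in sorter.get_ready():
                running[pool.submit(tasks[name])] = name
            # Block only until at least one running task completes.
            done, _ = wait(list(running), return_when=FIRST_COMPLETED)
            for future in done:
                future.result()  # re-raise any exception from the task
                name = running.pop(future)
                finished.append(name)
                sorter.done(name)  # may unlock dependent tasks
    return finished
```

In this sketch "physics" and "animation" can run side by side once "input" is done, while "render" is only submitted after both have completed.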

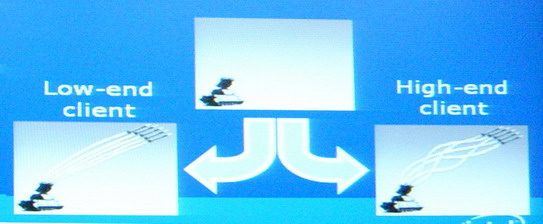

The CPU time made available by the additional cores can also be spent on richer effects:

- Better animation - adding cloth or hair simulation can add a lot of eye candy

- The environment can be made "more destructible" - on low-end systems, the environment is static and invincible; on high-end systems, more physics can allow the environment to be fully destructible.

- More complex particle systems - additional power can be used to initiate more complex behavior for particle systems

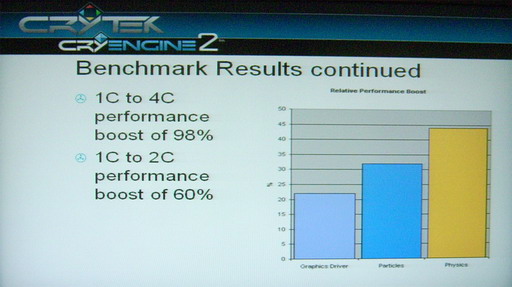

Crytek's CryEngine2 makes use of both aspects: on the software design side, it takes the new architecture into consideration to improve general engine performance. On the CPU use side, it uses the additional CPU time to implement better effects.

For example, CryEngine2 implements task level parallelism over these 6 key areas (not counting, of course, the functional decomposition of threads):

- File streaming

- Audio

- Network

- Shader compilation

- Physics

- Particle systems

Chart: performance improvement from one core to two and four cores (Intel Core 2 Duo Quad Core + NVIDIA 8800 Ultra; the one-core and two-core setups were created by disabling extra cores).

The conclusion is clear: a clever design that respects the peculiarities of multicore processors can have a tremendous effect on performance. Taking these points into account will allow your software to scale with the n-core processors that will hit the market in the coming years.

Better Games through Usability

Mario Wynands (from Sidhe Interactive) is a guy who is passionate about usability. And that's probably a good thing, because a game can hardly be fun if the player cannot understand how it works. In his talk, Mario explained to us how Sidhe Interactive handles this issue in order to improve the game interface and general usability.

Clearly, there is a need for a specific process: programmers are bad at spotting usability problems in their code - and there is a good reason for this: if you program something, you know how it works, so when testing you don't see any difficulty in making it work the way you want. Everything looks obvious to you because you know the internals of your game. But the average gamer is not part of the game creation process. He does not write the rules - he has to follow the ones you dictated. And of course there is a chance that the rules you wrote are a lot less obvious than you think. To solve this problem, Sidhe Interactive implemented a process where testing plays an important role.

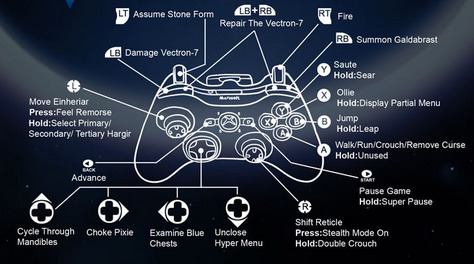

Usability, by www.penny-arcade.com

What is usability anyway? Mario uses the Wikipedia definition here: "Usability is a term used to denote the ease with which people can employ a particular tool or other human-made object in order to achieve a particular goal". Here, we are talking about leading the player to understand what his objectives are and how he has to play in order to achieve his goals in the game. Obviously, there is a tremendous need for data - as the programmers are not the ones who can decide whether a particular task is easy or not. To obtain such data, Sidhe Interactive uses gamers from the outside. Those gamers are filmed while playing (most of the time, only a small part of the game is playtested) and are asked to give their opinion on the usability. It is important to note that players are not testing the gameplay (which is part of the game anyway), nor are they testing the game quality (that's what QA is there for); they are testing general usability, answering questions like: "is it easy to understand how the game control works", "were you able to understand the purpose of the game", "was the inventory system efficient or not" and so on. The filming is there to record the players' reactions in real time - because the face of a frustrated gamer is quite easy to spot.

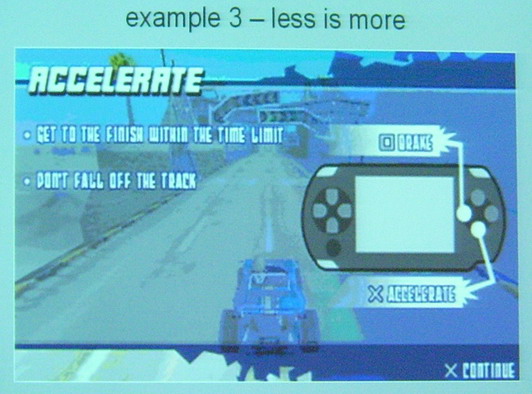

Sidhe Interactive has used this process for months now, and they have successfully applied it to their latest games, including Grip Shift. As a result, the usability team has made a few key findings:

- Players don't like to read - so you'd better avoid long text. Mario gave us a twist on this: Grip Shift allows the player to build his own tracks. When testing the track editor on PSP, they faced many cases where the player asked for help ("how can I do this or that"). At the end of the sessions, these players were asked what should be added: they all wanted some help text. The thing is that help was already displayed on the main user interface, and people just didn't want to read it - they did not recognize the text on their screen as help. By removing this text and adding a help button, Sidhe Interactive solved the problem.

- Goals have to be obvious - unless you want your player to wander in the game world with no idea of what he should do.

- When you display a text and an image, people assume that they both represent the same thing - so you'd want to make clear that they don't represent the same thing (for example: the mission description and the controller setup) by using a clear separation (for example, two consecutive screens)

Testing usability with external gamers is not very difficult to implement in any studio. All you need is a dedicated room, a webcam, a pool of players (your local game retailer will be a good place to hunt them down: a lot of players will be more than happy to test your game, especially if you reward them at the end), some time to analyze the collected data and the will to actually act on the results. There is no point in setting up usability testing if you are not prepared to make your programmers accept the results you get (and this is where videos might help: saying to a developer "this part is frustrating for most players" when he actually finds it easy to deal with is not going to work; showing the real gamers' reactions might help a lot). The good thing is that you don't even need to spend all your time waiting for new testers, as just a few of them will already spot a large part of your usability problems.

Procedural Texture Generation Theory and Practice

We have already talked about procedural texture creation recently (at FMX'07, Allegorithmic's Sebastien Deguy presented MaPZone and ProFX, and we recently featured a MaPZone tutorial here on gamedev.net). Dierk "Chaos" Ohlerich of the German company ".theprodukt" GmbH presented their own solution to the problem.

You might not know .theprodukt. It's enough to say that they come from the demo scene, where they literally blew everyone's mind by releasing their first public demo, fr-08: .the .product, under the team name farbrausch. Later, they released the 96kb FPS game .kkrieger - that's right: a whole 3D environment complete with textures and models in 96kb. More recently, they received some attention after Will Wright cited them during a talk about Spore. .theprodukt now sells their solution as a middleware made of two parts:

- A free texture creation tool (.werkkzeug3 Texture Edition)

- A non-free Texture Generation Library that reconstructs the texture in your application (if you are on a budget, .werkkzeug3 can export the texture as a bitmap - you'll lose the benefits of using procedural textures but you'll still be able to create them and use them in your game).

Dierk Ohlerich of .theprodukt is one of the people responsible for this technology. He presented the reasons why everyone should consider procedural texture generation for game development:

- Procedural textures are memory friendly - and of course bandwidth friendly.

- They have a lot of cool side effects - ranging from resolution independence to non-linear editing

The problem is that even if we know how to make a procedural texture, we still need a way to combine all these operators (functional and full image) in a user friendly way. The first farbrausch production used a scripting system - but that's hardly an effective way of creating complex textures. It became quite clear to the .theprodukt team that a GUI tool was needed to cut development time. A texture is then described using a directed graph that groups operators together.
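To make the idea of a directed operator graph concrete, here is a minimal sketch. It is not .theprodukt's implementation: the operators (`noise`, `gradient`, `invert`, `multiply`), the `GRAPH` layout and the `evaluate` function are all made up for illustration, and the texture is a tiny grid of floats rather than a real image.

```python
import random

SIZE = 8  # tiny texture so the example stays readable


def noise(seed):
    """Generator operator: seeded random values in [0, 1)."""
    rng = random.Random(seed)
    return [[rng.random() for _ in range(SIZE)] for _ in range(SIZE)]


def gradient():
    """Generator operator: horizontal ramp from 0.0 (left) to 1.0 (right)."""
    return [[x / (SIZE - 1) for x in range(SIZE)] for _ in range(SIZE)]


def invert(a):
    """Filter operator with one input."""
    return [[1.0 - value for value in row] for row in a]


def multiply(a, b):
    """Filter operator with two inputs."""
    return [[a[y][x] * b[y][x] for x in range(SIZE)] for y in range(SIZE)]


# Hypothetical graph description: node -> (operator, parameters, input nodes).
GRAPH = {
    "noise": (noise, {"seed": 7}, []),
    "ramp": (gradient, {}, []),
    "fade": (invert, {}, ["ramp"]),
    "out": (multiply, {}, ["noise", "fade"]),
}


def evaluate(graph, node, cache=None):
    """Evaluate `node` by first evaluating its inputs; each node runs once."""
    cache = {} if cache is None else cache
    if node not in cache:
        operator, params, inputs = graph[node]
        args = [evaluate(graph, name, cache) for name in inputs]
        cache[node] = operator(*args, **params)
    return cache[node]


texture = evaluate(GRAPH, "out")  # noise that fades out towards the right edge
```

Only the small graph description has to be stored or shipped; the pixels are rebuilt on demand, which is where the memory savings mentioned above come from.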

Dierk then described some implementation choices that .theprodukt had to make in order to create their middleware.

- Of course, as soon as you're dealing with randomness and floating point math, you are going to face reproducibility issues: different hardware might lead to different results.

- The texture creation process can be heavily parallelized because of its very nature: large parts of the tree branches in the description graph are independent from other branches and consequently can be processed in parallel.
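The reproducibility issue can be illustrated with a small sketch. One common way to sidestep it (an assumption on my part, not necessarily the choice .theprodukt made) is to build noise from integer arithmetic only, since integer operations give identical results on every machine. The function name `hash_noise` and the mixing constants below are arbitrary.

```python
MASK32 = 0xFFFFFFFF  # keep every intermediate value in 32 bits


def hash_noise(x, y, seed=0):
    """Integer-only noise: same (x, y, seed) always gives the same byte (0-255)."""
    h = (x * 374761393 + y * 668265263 + seed * 2147483647) & MASK32
    h = ((h ^ (h >> 13)) * 1274126177) & MASK32
    h ^= h >> 16
    return h & 0xFF


def noise_texture(size, seed=0):
    """Fill a size x size grid; no floating point and no random generator state."""
    return [[hash_noise(x, y, seed) for x in range(size)] for y in range(size)]
```

Because each texel depends only on its coordinates and the seed, rows or tiles can also be computed in any order, or in parallel, without changing the result.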

.theprodukt released the slides of the talk at

http://theprodukkt.com/downloads/slides_procTextures.pdf

What makes games Next Gen?

Don L. Daglow (president and CEO of Stormfront) is a veteran veteran (yes, that's the same word twice) - a guy who has been around since the very beginning of our game industry. He has the kind of experience and fame in the industry that we all want to get. He made games for machines that you now find in museums - but that doesn't mean that his views on our gaming world are outdated. In fact, it's quite the contrary: I was told that he is the one who coined the term "Next Gen" (and frankly, I believe this) and he is probably one of the only people in the 6 Realms (well, at least on Earth) who has more than a clue about what makes a game next gen.

Mr. Daglow began his talk by explaining what happens when so-called "next-gen" hardware first hits the market. Every time new hardware is introduced to the market, these steps repeat again and again.

- Exclusive titles are developed to show the power of the hardware; those exclusive titles get extra attention and extra money, even if games for the older platform outsell them in the end (for example, PS2 games are still sold a lot but Sony is making more buzz around PS3 games; the obvious goal is to sell the new console).

- "Teams are learning new machines". Even experienced teams are facing the frustrating period of moving to new hardware, new architecture and ultimately to face new challenges. This learning time typically lasts for one or two games - the better hardware knowledge typically leads to better games. Don Daglow gave the example of the Amiga - when it hit the market it introduced the idea of bit-planes, which was completely new at this time.

- The first intended audience is made of hardcore players - they are the ones who for years have been standing in front of stores for days or weeks at a time to get the first batch of new hardware. As Don Daglow said, "They are the people for who this is not entertainment. This is Religion". So what games must be produced at the beginning of a new cycle? Of course: games for hardcore players. But at the same time, these players want new things - which is a bit contradictory because hardcore players don't like new things: they want to play the same game over and over again. In fact, despite their claim, they don't like change that much. "The rule book of what you must do for a hardcore gamer is 200 pages long. That is about doing the same and not necessarily doing things differently."

- As a consequence, journalists focus on that hardcore audience - because if they don't, the game magazines don't sell, and there is no need for journalists. But game reviews typically begin with something along the lines of: with this totally new and incredible hardware we were promised a brand new experience - but all we get is more of the same.

What is true is that the arrival of new hardware is a great time to experiment with new ideas in the first place.

Later in the cycle, the installed base has grown. The prior generation is not used as much as it was earlier in the cycle - the price of its games drops, and it's no longer interesting for publishers and developers to make new games for the old systems. Teams are now more experienced, and hardcore gamers now represent only a few percent of the audience - but they are still influential: they are now opinion leaders. This is the beginning of the mature cycle.

Fast forward in time: the prior gen is really fading away. The new hardware install base is enormous. There are now three types of player: the hardcore player, who wants a specific game; the a-bit-more-than-casual player, who wants a specific kind of game but is not really aware of every other title; and the type of gamer that proliferates later in the cycle, who is not necessarily aware of the possibilities of the platform and is not even interested in them. This is the time for high profile licensed games that appeal to an audience based on the franchise they are using.

After having discussed next-gen hardware, Don Daglow spoke about next-gen software, beginning with Sim City: when Will Wright tried to explain it to publishers he was turned down because "this is not a game!". Ultimately, someone foresaw the potential of Sim City - and he was right. In a sense, Sim City was truly a next-gen game.

And consider Guitar Hero. A truism of software publishing is that you can't sell expensive hardware with a game; another one is that every box on the shelf should be the same size. Guitar Hero managed to make these two truisms wrong (guitar controller, huge game box to package it). But the real novelty with Guitar Hero is the fact that instead of saying "I played Oblivion all weekend", people now say "We played Guitar Hero".

Geometry Wars showed that "all games could be new again". Old-school graphics defy the notion of next-gen, but the success of Geometry Wars is proof that "old things can be new again". Is it next-gen?

World of Warcraft? As Don Daglow asked, "Does it make it next-gen if you prove that a massive audience exists for something that you might not have known existed before?". When he designed his first MMO back in '89 his design document stated that they would be lucky if they got 35 people on their server. They got 50 - and felt very strong.

He also drew a parallel with how emotions are handled in movies - it is not clear whether they now come from what is filmed or from the special effects and post-production. Emotions are beginning to make their way into the game industry. Is a game that plays with the player's emotions next-gen?

Two years ago, Don Daglow was asked: what makes a machine next-gen? He cynically answered that what makes a machine next-gen is mainly the marketing budget and the commitment of the marketing crew that describe it as a next-gen machine. His opinion is now that a next-gen platform is a platform that offers the potential to dramatically change the player's view and experience of what interactive entertainment is. According to him and under that definition, the Wii is a next-gen platform.

And the same goes for software: if a game is changing the way people see games, then this game is next-gen. The difference is that you can know what will be the next hardware revolution - but you can't foresee any drastic changes in software: you can only witness that something has changed.

Interactive ray tracing in games

When I read this session title, my first thought was: "What? Ray tracing in games? No chance to see that during my whole lifetime!". When I got out, my first thought was: "poor NVIDIA... poor ATI...". Of course, I'm exaggerating a bit: NVIDIA and ATI are still going to sell GPUs for a few years. But the fact is that ray tracing is very likely to become an alternative rendering method for games - one that works well, and fast.

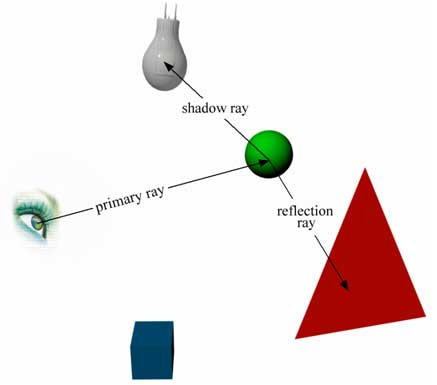

This talk was given by Daniel Pohl (Intel), who was already responsible for the Quake 3: raytraced and Quake 4: raytraced projects. His goal was to give us a tutorial about ray tracing and how it can be used in games. Instead of going through his entire tutorial, I will briefly summarize some base principles of ray tracing.

- A ray is cast from the camera to a scene object A

- If A is reflective, the ray is bounced and continues its way in space to an object B.

- If A is refractive, the course of the ray is modified.

- A shadow ray is cast to the light source to test if the collision point on A is lit.

Ray tracing basics

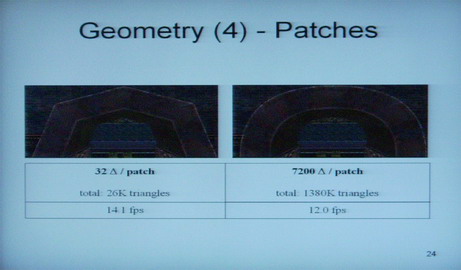

As you can see, the only thing you need in order to implement a ray tracing algorithm is a ray/object intersection (collision) algorithm. But using only this naive approach, you'll be stuck with an "era-time" ray tracer - and certainly not a real time one. Part of the hard work to make it faster is to use proper acceleration structures. Daniel based his work on OpenRT, which stores the geometry in a BSP tree. As he said later in his talk, this has consequences when it comes to dynamic geometry: moving a single triangle can force a large part of the tree to be rebuilt - and such situations are not practical at all. This problem required some hacks to be handled correctly.
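The naive approach described above fits in a few lines. The sketch below is an illustration only, not Daniel Pohl's or OpenRT's code: it uses spheres instead of triangles because the intersection test is shorter, tests every object with no acceleration structure, and the names `hit_sphere`, `nearest_hit` and `is_lit` are hypothetical. It covers the primary ray and the shadow ray from the list of principles.

```python
import math

EPSILON = 1e-6  # ignore hits at the ray origin itself (avoids self-shadowing)


def hit_sphere(origin, direction, center, radius):
    """Distance along a normalized ray to the nearest hit on a sphere, or None."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c  # discriminant of t^2 + 2bt + c = 0
    if disc < 0.0:
        return None  # the ray misses the sphere
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # nearest root first
        if t > EPSILON:
            return t
    return None  # the sphere is behind the ray


def nearest_hit(origin, direction, spheres):
    """Brute-force search over all spheres; returns (distance, index) or None."""
    best = None
    for index, (center, radius) in enumerate(spheres):
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and (best is None or t < best[0]):
            best = (t, index)
    return best


def is_lit(point, light, spheres):
    """Cast a shadow ray from `point` to `light`; True if nothing blocks it."""
    to_light = [l - p for l, p in zip(light, point)]
    dist = math.sqrt(sum(v * v for v in to_light))
    direction = [v / dist for v in to_light]
    hit = nearest_hit(point, direction, spheres)
    return hit is None or hit[0] >= dist
```

The loop in `nearest_hit` is exactly what makes this version slow: every ray is tested against every object, which is the cost an acceleration structure such as a BSP tree is meant to remove.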

But despite this, ray tracing has many great features that traditional rasterization is missing:

- In rasterization, the influence of geometry is linear: if you have 3 times more triangles to handle, you'll need 3 times more time. Using ray tracing, a linear growth in geometry will only have a logarithmic impact on the process. As a consequence, one can safely subdivide patches or surfaces (for example to implement displacement mapping) at a fraction of what such subdivision would cost in a rasterizer.

- Pixel-perfect shadows are essentially free, and realistic soft shadows only require casting more rays. The number of light sources has no influence at all.
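To put rough numbers on the linear versus logarithmic claim, here is a back-of-the-envelope sketch. It assumes an idealized, balanced acceleration tree whose depth grows with the base-2 logarithm of the triangle count; real BSP trees are not perfectly balanced, so treat the figures as an order of magnitude only. The function name `relative_cost` is made up.

```python
import math


def relative_cost(triangles, baseline, model):
    """Cost of handling `triangles` relative to `baseline` under a growth model."""
    if model == "linear":  # rasterizer: every triangle is processed
        return triangles / baseline
    if model == "log":  # ray tracer: per-ray work follows the tree depth
        return math.log2(triangles) / math.log2(baseline)
    raise ValueError("model must be 'linear' or 'log'")


raster = relative_cost(3_000_000, 1_000_000, "linear")  # 3.0
traced = relative_cost(3_000_000, 1_000_000, "log")  # about 1.08
```

Under these assumptions, tripling a one-million-triangle scene triples the rasterization work but adds only about 8% to the per-ray traversal work.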

- Per-pixel illumination is the norm, no matter what the light source type is. You can even implement a textured light source (the effect would then be similar to the one that was featured in the first Unreal 3 Techdemo).

- Shader effects can be implemented very easily (Daniel gave the example of texture coordinate manipulation, camera portals or ground fog).

- Reflection and refraction are physically correct. In a rasterizer, reflection is implemented with textures and this technique is subject to many limitations (scene complexity might prohibit a correct implementation). Using ray tracing, reflection and refraction are natural - as it mimics the real world. Multiple reflections are also very easily implemented.

Daniel also discussed a fundamental feature of any 3D game: collision detection. Collision detection between a moving object A and another object (be it static or moving) can be implemented in a ray tracer by casting multiple rays from A. If a ray hits a surface, we get (for free) the hit position, the normal at this position and the distance between the colliders. The cost of collision detection in a ray tracer is very low: only a few rays have to be used for each moving object - and that number has to be compared to the number of primary rays that are cast to render the frame (for a 1024x1024 image, at least 2^20 rays must be computed - not to mention anti-aliasing and special effects such as shadows and reflection). Again, the number of triangles in the scene only has a logarithmic influence on the collision detection.

Many special collision detection cases can be handled in a very elegant way: for example, the case of transparent textures. While a rasterizer based engine might have some difficulty handling partially transparent surfaces (for example a grid), a ray tracer deals with them very naturally.

Real time ray tracing is still not ready for games - there are still some heavy performance issues, but as newer computer architectures (especially n-core processors) become more widespread, one can begin to consider ray tracing as a viable alternative to rasterizing for games. Of course, ray tracing can be heavily parallelized - that would explain Intel's interest in this research.

Designing by Playing

This talk is worth comparing with the "Better Games through Usability" talk, as the process is roughly similar. However, there is a big difference: while usability testing focuses on making the interface and general usability better, design by playing focuses on improving the player's experience from a gameplay point of view.

As explained by Bruce Shelley (Ensemble Studios), designing games by playing them is a process defined by "playing a lot in order to improve different aspects of your game". This process has been applied successfully by Ensemble Studios since its inception, and continues to be used today to design their new games. To implement Design by Playing, you don't need many resources: what you need is a team of game developers who love to play games. The process is then a succession of rapidly handled phases:

- Implement a new feature.

- Test to see if the feature is fun to play with.

- If yes, keep it - if no, remove it.

The result of such a game design process is - in the experience of Ensemble Studios - well balanced games that appeal to a broad audience. Hardcore gamers find something to feed them while casual gamers are not turned away by the complexity of the game: everyone can have fun playing the game.

Of course, there are some drawbacks. The first one is that you can't design by playing without a first game design - and of course a first implementation of this design. You need something to initiate the whole process.

Next, it's impossible to design by playing without making numerous different builds of the game. Over the course of 3 years, Ensemble Studios generated more than 4,000 builds of their game "Age of Empires 3" - that's more than 5 builds per working day. So the first thing to do if you want to apply the same process is to make sure that your build process is efficient - if you need half a day to build a new version of your game, you're screwed.

There is also a big problem related to the game design document: with that huge number of iterations, experiments and builds, it's impossible to keep it up to date. Ensemble Studios used a very "agile" process to track ideas that worked and those that didn't: basically, a sheet of paper (well, many sheets of paper).

But the biggest drawback is related to the project management itself: you just never know what will be implemented next, and as a consequence you'll have a hard time estimating the time you'll need to finish your game. It's also very difficult to manage a milestone-based deal - because you just don't know what you're going to push into your game for the next milestones. If you're an independent developer that doesn't rely on a publisher to sell the game, that can be fine. Otherwise, this can prove to be quite difficult.

Strangely enough, feature creep is not exactly a problem: if you don't have the time to add a neat feature before shipping, just don't add it. Bruce Shelley mentioned that near the end of the development of Age of Empires 1 they brainstormed to find new ideas. The result of this brainstorm was a multi-page document where every feature was supposed to be implemented in the game. In the end, only half a page of these found their way into the game - and most of the unused ideas were used as a base to create Age of Empires 2.

Engine Panel

The Engine Panel was moderated by Goetz Klingelhoefer (Krawall Gaming Network) and featured key representatives from major middleware users and makers:

- Bruce Rogers from Cryptic Studios - Cryptic doesn't license their engine but they have built their own internal middleware over the years.

- Doug Binks, from Crytek, makers of the CryEngine2

- John O'Neil of Vicious Cycle, makers of the Vicious Engine

- Mark Rein of Epic Games, makers of the Unreal 3 engine

After the panelists introduced themselves, Goetz Klingelhoefer first asked them what the reasons are to use a piece of middleware rather than create it in-house. John O'Neil answered (well, he first tried to get his microphone working; a bit of help from Mark Rein was needed to fix everything): "from a developer's perspective (...) you can really look at two things: developing technology and developing games. If you really want to focus on your core business you have to define what your business is. Is it building technology (...) or is it building games? So the more time you are focusing on game content (...) the better you're going to be."

Mark Rein was then asked if using an engine was really a time saver - a question to which Mark answered that while you may not save time you enable yourself to use that time to create a better game: "Instead of creating a renderer, a particle system or a scripting system you can create a RPG system or a dialogue system". Ultimately, it depends on where you want to put your money - and "that's a game by game decision".

At what point should the decision to use an engine be made? Doug Binks thinks, "it's got to be really early on." Publishers will probably want you to check whether there is any middleware that can help you during development. "If you can use something available, then do that".

Goetz Klingelhoefer then asked if any panelist had ever had to say to a potential customer "we're not going to license you our engine because it doesn't make sense" (from a technical point of view). This is a situation that Epic had to face once: Mark Rein explained that they thought that the game idea of their potential licensee was too weird to actually have any success - and ultimately, Epic proved to be wrong as the game did well. "We learned that (...) we don't have a better crystal ball than everybody out there (...) so we decided not to be the quality police." Of course, there are cases where the engine may not be a good technology fit. "The thing is that you don't want to be in a situation where the engine is not a good fit. That's not good for you and that's not good for them.". John O'Neil added that honesty is an important aspect of the engine business - if Vicious thinks that their engine will not be able to do what the game developer wants it to do, they don't hesitate to point him to other middleware solutions.

The moderator then wanted to know if the engine makers were incorporating their licensees' feedback in their product. Mark Rein's answer was: "we specifically don't do that. (...) The best way to make an engine (...) is to make our game. (...) If we try to put features in our engine that we are not going to use then we can never bring them to full, professional, ship-a-game quality. (...) We will not put your features in our engine."

What about developers who are reluctant to use engines? Mark Rein first noted that we are already all using middleware - DirectX or OpenGL are middleware. If the problem is about missing the feeling of getting your hands dirty with the technology, then there is no problem either: you still have to know your platform, and you still have to know how to code very advanced features. John O'Neil added, "You still have to be able to test and debug everything".

Is there a real world example of a problem that can occur when using the panelists' middleware? John O'Neil thinks that most of the problems they face are in fact internal problems: for example, while they have already presented their PS3 and Xbox360 solution, they had to postpone the release of this version because they want to test it fully before making it available for general use. Doug Binks can't say much, as he has only been with Crytek for a short period, but he already sees a lot of questions from developers who want to use the engine without following the engine philosophy. He said "try to do it with the engine; (...) don't try to replicate the functionality that are lying out there until you really know that it's not going to do what you need to do."

Then the Q&A session began. Some interesting questions came out, including:

- Why are the engines marketed as general when the first party games are all FPSs? Answer: the engines themselves are general. FPSs are demonstrating the engine in a rather efficient way - mostly because FPSs are high-demand games.

- Why is there no RTS-specialized engine? Answer: because the companies who make these engines don't want you to use their technology.

- Is OpenGL dying? Answer: no! But that doesn't mean that OpenGL doesn't have any problems, being driven by a consortium that has only loose interest in making the beast evolve. As of today, OpenGL is lagging behind DirectX on the Windows platform - but on the other hand it's the only available API on all the other platforms (and the PS3 API is loosely based upon OpenGL; Mark Rein even asked for an OpenGL driver for the PS3 Linux distributions).

Post Mortem on Titan Quest

(report by Felix Kerger) In his Post Mortem on Titan Quest, Brian Sullivan, founder of Iron Lore, talked about several aspects of the game's development.

The part about founding Iron Lore and the problems they had finding a publisher for Titan Quest was very interesting. In the first years, Brian Sullivan and Paul Chieffo paid all of Iron Lore's expenses with their own money. This was a high risk and became rather stressful for them, because they couldn't find a publisher. In the first year they created the concept of Titan Quest and tried to convince a publisher to finance the project. As no publisher was interested, they started to develop a demo version of the game, hoping it would make finding a publisher easier. But even with the demo they couldn't get a deal, so they continued to develop the demo and searched for a publisher at the same time. After one year THQ agreed to finance the project and Iron Lore started to hire new people. Before this there were only a handful of people developing Titan Quest; after the hiring there were about 30 people working on the game.

After this short introduction he talked about what went right during the development of Titan Quest. One important fact he mentioned was that the team was able to hold on to their vision of the game and work towards it. The presentation of their level editor, which enables them to create complete worlds, draw rivers and much more, was very impressive. Because of this editor they only needed two people to create the world of Titan Quest. The editor is included in the game so the community can create new worlds on their own.

After the "What went right" section came the "What went wrong" section, no surprise there. Their biggest problem was that they had to hire more than 20 people at once; this made it difficult to pass on the vision of the game to the new members.

All in all, this talk was one of the best I attended at the GCDC. It showed interesting and new aspects for game developers, especially those who plan to start their own company and have to deal with publishers and with finding new team members.

The Game Convention

On the third day, I had the opportunity to wander in the huge halls of the Game Convention - the primary reason for that was to find interesting people to talk with. Needless to say, that was not very hard - rooms were filled with bright guys and girls from here and there. So I grabbed my voice recorder (39.95EUR), my cheap low-quality microphone (6.99EUR), and ran into the crowd to interview a few people (not sure about the cost here). The result is featured on gamedev.net as a set of podcasts (priceless).

IBM: MMOG Hosting for Taikodom (Hoplon Infotainment)

IBM is now selling a hosting solution for your MMO, and the Brazilian company Hoplon Infotainment is using it to power its forthcoming massive social game, Taikodom. If you're an independent game developer with a vision (and some money), you have to check out this podcast. If you are a hobbyist game developer with an interest in technology, then you also have to check out this podcast. And, well, if you are alive and you're reading this, then listening to this podcast is your duty.

Tarquínio Teles, CEO of Hoplon Infotainment about IBM MMOG hosting solutions

Gamr7: procedural city creation

Gamr7 (for those who don't get it, it's "Gamer's Heaven") is a small French company that markets a unique solution for building realistic cities using procedural techniques. If you want to create a new city on some far-off planet, or a new urban environment for your upcoming FPS, or huge smurf communities (now, that sounds fun), their solution is a must-have.

Lionel Barret de Nazaris, Founder & CTO of Gamr7 about the creation of procedural cities

Kynogon: dynamic NPC behavior using Kynapse

Andy Brammall is the European Sales Manager of Kynogon. As such, he is the best guy to show Kynogon's AI solution - Kynapse. You can find some demos of Kynapse on Kynogon's website. The best way to approach them is to get some explanation of what each demo really does, play with it, and watch the result. This was the subject of my query to Andy Brammall, and he kindly explained everything to me.

Andy Brammall, European Sales Manager of Kynogon about Kynapse demos

Screenshot from one of Kynogon's Kynapse demos