5 hours ago, Zakwayda said:

Hm, I didn't hear that claim made in the video. I'd probably need a timestamp or quote to know what you're referring to.

Ah, I linked the wrong video. Sorry for wasting your time there! Here is the correct link with a timestamp (explanation ends at around 3:40):

Specifically this part, at around 3:20:

Quote

"Only on the perspective projection, +1 is going out away from our view point, -1 is close, right up to our eye, and I'm pretty sure that only has to do with depth tests... most of the time in OpenGL we're right handed, once we do the perspective projection, we go to left handed."

6 hours ago, Zakwayda said:

I'm not sure why your depth test is (or was) reversed, but I'm guessing it's due to a mismatch between your view transform and/or worldspace coordinates and your projection transform convention. If switching to a LH projection solves the problem, that suggests your view transform and/or worldspace coordinates are configured (intentionally or otherwise) with the expectation of a left-handed projection.

I have set up all my z coordinates with the expectation that -1 will be coming out of the screen, while +1 is going into the screen:

    std::array<float, 12> RedSquareVertices = {
         240.0F, -135.0F, -0.1F,
         240.0F,  135.0F, -0.1F,
        -240.0F,  135.0F, -0.1F,
        -240.0F, -135.0F, -0.1F
    };

    std::array<float, 12> BlueSquareVertices = {
        1200.0F, 405.0F, -0.2F,
        1200.0F, 675.0F, -0.2F,
         720.0F, 675.0F, -0.2F,
         720.0F, 405.0F, -0.2F
    };

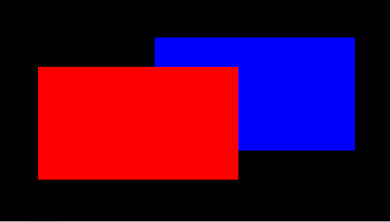

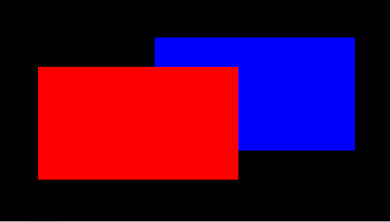

So in the example above, with depth testing enabled, I would expect OpenGL to render the blue square (BlueSquareVertices, z = -0.2) on top of the red square (RedSquareVertices, z = -0.1): its z values are smaller, which under my convention means closer to the viewer, and the depth function defaults to GL_LESS. However, this isn't what happens. See below:

When using glm::ortho(left, right, bottom, top, near, far):

When using glm::orthoLH(left, right, bottom, top, near, far):

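For reference, here is a rough sketch of what I think is going on with the two projections. It only reproduces the z row of each orthographic matrix by hand, assuming GLM's default configuration (right-handed glm::ortho, -1..1 depth range). The function names are mine, and the near/far values in the usage note are placeholders rather than the ones from my actual project.

```cpp
#include <cstdio>

// NDC depth from a right-handed orthographic projection with a -1..1 depth
// range (assumed to match glm::ortho with GLM's default configuration).
// Note the negative scale: z is flipped.
constexpr float orthoRhNdcZ(float z, float zNear, float zFar) {
    return -2.0F / (zFar - zNear) * z - (zFar + zNear) / (zFar - zNear);
}

// Same mapping for the left-handed variant (assumed to match glm::orthoLH).
// The scale is positive, so the ordering of z is preserved.
constexpr float orthoLhNdcZ(float z, float zNear, float zFar) {
    return 2.0F / (zFar - zNear) * z - (zFar + zNear) / (zFar - zNear);
}

// GL_LESS: the incoming fragment wins when its depth is smaller than the
// depth already stored in the buffer.
constexpr bool passesGlLess(float incoming, float stored) {
    return incoming < stored;
}

// Hypothetical helper: print the NDC depths of the red (z = -0.1) and
// blue (z = -0.2) squares under both projections.
inline void printSquareDepths(float zNear, float zFar) {
    std::printf("RH: red %f, blue %f\n",
                orthoRhNdcZ(-0.1F, zNear, zFar), orthoRhNdcZ(-0.2F, zNear, zFar));
    std::printf("LH: red %f, blue %f\n",
                orthoLhNdcZ(-0.1F, zNear, zFar), orthoLhNdcZ(-0.2F, zNear, zFar));
}
```

Calling printSquareDepths(-1.0F, 1.0F) should show the red square with the smaller NDC depth under the right-handed mapping and the blue square with the smaller NDC depth under the left-handed one. With GL_LESS that would put red on top with glm::ortho and blue on top with glm::orthoLH, which is consistent with what I am seeing.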