6 minutes ago, Zakwayda said:

Unfortunately I'm not using Direct3D currently and haven't for quite a while, so I may not be able to help with the particulars of your problem.

I do have a suggestion, though. If I were you, I'd try to find a simple, self-contained tutorial with complete working source code that does the basics (renders a simple primitive with a matrix transform, etc.). Get a project working from it, copy-pasting or using the code verbatim if needed. If you can get it working, you'll have reference code that you know works (assuming the tutorial is sound). You can then build your own code from there, and if things go wrong, you'll have working code to check against.

Anyway, just a suggestion. (Of course, it's also possible someone who's up to speed with Direct3D will be able to answer your question here.)

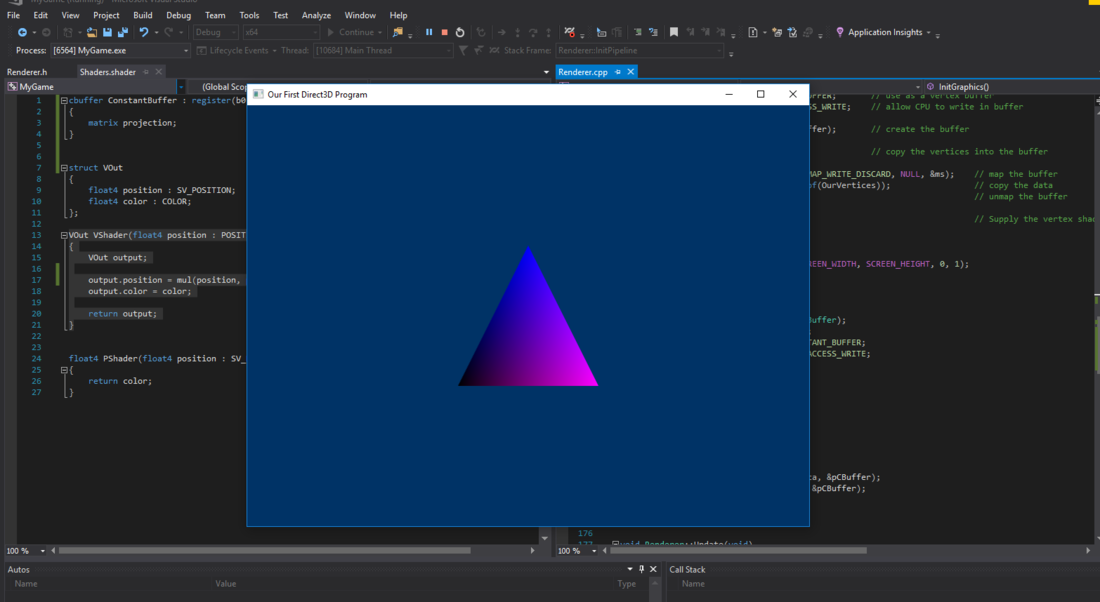

I agree about finding a self-contained tutorial; that's trickier than you'd think.

The RasterTek tutorial is huge. I could probably compile it and tear it apart to get to the bottom of this mess, but that could take a while.

Persistence, I've found, is always a large part of success in learning to program anything.