PAX:

I went to PAX as an attendee and checked out a few booths and games. I had thought about setting up a booth in the VR section, but the financial reality made that impossible: I just can't afford the booth fee (a 10x10 booth costs $1,050). Even with an indie discount, the price puts me way out of range. So, out of wild curiosity, I went to check out the VR village section of PAX. How many VR developers decided to have a booth at PAX? Who showed up? What was being shown? The results were surprising and not surprising at the same time. Oculus had bought half of the floor space and was busy giving demos of its hardware and select games. They have deep pockets, so they can afford that, whatever it cost. The rest of the booths? There were about four VR booths. One was an indie team showing off a really early and rough prototype of their Unity game. It was so early and rough that it was unremarkable at best. They got lots of feedback, but it cost them about $1,000 and four days. There was Archangel VR, a booth run by Skydance Interactive's VR division, but aside from that, there was nobody else there. Apart from that one team, there were zero indie VR developers at PAX. What does that tell you? I think it means that a lot of other indie VR developers are in exactly the same position I am in and can't afford PAX, so most of the VR industry is a no-show.

VR Contract projects & 360 VR Audio:

I created a few 360 videos in VR with the film guys in my office space. I've gotten pretty good at it, so we're starting to experiment a bit more. One of the problems I have with 360 videos in VR is that they're really not the "best" use for VR. I think the inherent nature of VR requires the audience to feel like they exist within the virtual environment, and 360 video doesn't quite sell the experience. So, we're looking at creating hybrid experiences, where a 360 video is combined with 3D props which people can interact with. I'm also experimenting with better directional audio. I'm setting up a test demo where we have a 360 camera rig set up in a room and four microphones placed at known, fixed positions relative to the camera. Then, I'm going to walk around the camera and make some noise so that the four microphones pick it up. Then, I'll take all four audio recordings and place sound emitters at the same relative positions within VR, so when you replay the experience, each audio emitter plays its recorded track and the sound is automatically spatialized and attenuated. The cool part in all of this is that VR requires directional audio to draw the audience's attention to a point of interest, like indirectly saying, "Hey, look over here! Something interesting is about to happen!" This does create some additional demands for video production, though: if a crew goes on site to film, they have to hide four to five microphones within the scene and record their positions relative to the camera so that the audio can be correctly replayed. The test is to see whether this creates enough value to be worth the effort.
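To make the mic-to-emitter mapping concrete, here's a minimal Python sketch of the bookkeeping. It assumes each mic's offset is measured in metres in the camera's frame (x right, y up, z forward) and that the viewer's head sits where the camera rig was. `MicPlacement`, `place_emitters`, and the file names are hypothetical; in a real engine the built-in spatializer handles attenuation and panning, so the simple inverse-distance `inverse_distance_gain` and `azimuth_deg` helpers only illustrate the kind of thing it computes.

```python
import math
from dataclasses import dataclass


@dataclass
class MicPlacement:
    name: str
    offset: tuple  # (x, y, z) metres relative to the 360 camera: x right, y up, z forward
    track: str     # path to this microphone's recording (hypothetical file names below)


def place_emitters(camera_pos, mics):
    """Put one emitter per mic at the same camera-relative offset in the VR scene."""
    cx, cy, cz = camera_pos
    return {m.name: (cx + m.offset[0], cy + m.offset[1], cz + m.offset[2]) for m in mics}


def inverse_distance_gain(listener, emitter, ref_dist=1.0):
    """Simple 1/d attenuation, clamped to full volume inside ref_dist."""
    d = math.dist(listener, emitter)
    return 1.0 if d <= ref_dist else ref_dist / d


def azimuth_deg(listener, emitter):
    """Horizontal bearing of the emitter for a listener facing +z (positive = right)."""
    return math.degrees(math.atan2(emitter[0] - listener[0], emitter[2] - listener[2]))


# Four mics 2 m from the camera, one per compass direction.
mics = [
    MicPlacement("north", (0.0, 0.0, 2.0), "mic_n.wav"),
    MicPlacement("east", (2.0, 0.0, 0.0), "mic_e.wav"),
    MicPlacement("south", (0.0, 0.0, -2.0), "mic_s.wav"),
    MicPlacement("west", (-2.0, 0.0, 0.0), "mic_w.wav"),
]
camera = (0.0, 1.6, 0.0)  # viewer's head sits where the camera rig was
emitters = place_emitters(camera, mics)
```

The only thing the film crew really has to get right on set is the `offset` of each hidden mic; everything else can be reconstructed in the engine.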

Another VR project I worked on recently was a 360 VR video which plays like a "choose your own adventure" story. It's interesting: you watch a bit of 360 video with actors following a script, something happens, a decision has to be made, and then you, the audience, decide which direction the story goes. The next segment of video then plays until the next choice. I found that this has some technical problems to overcome:

1) High-resolution 360 video has a large file size. One minute of video is about 500 MB.

2) The total size of a choose-your-own-adventure video application is the sum of all its videos, not just the ones a single viewer watches. A 20-minute experience, once every branch is counted, could easily be 20 GB. I don't know how we're going to get people to download that onto their mobile phones. Video streaming would be a necessity!

3) Users have to be told when they need to interact with the video. The interactive part of the video should loop without breaking the narrative or the fourth wall. This creates narrative challenges.
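A rough Python sketch of why point 2 bites: the app has to ship every segment, but a viewer only ever watches one path through them. The `Segment` type, the `loops_while_waiting` flag (for point 3), and the example story are hypothetical; the 500 MB per minute figure is the one from point 1.

```python
from dataclasses import dataclass, field

MB_PER_MINUTE = 500  # rough size of high-resolution 360 video, from point 1


@dataclass
class Segment:
    name: str
    minutes: float
    # choice label -> name of the next segment; an empty dict means this is an ending
    choices: dict = field(default_factory=dict)
    # does the tail of this clip loop while the viewer decides? (point 3)
    loops_while_waiting: bool = False


def total_storage_mb(story):
    """Everything the app must ship: the sum of all segments, on every branch."""
    return sum(seg.minutes for seg in story.values()) * MB_PER_MINUTE


def longest_playthrough(story, start):
    """Longest single path in minutes (assumes the story graph has no cycles)."""
    seg = story[start]
    if not seg.choices:
        return seg.minutes
    return seg.minutes + max(longest_playthrough(story, nxt) for nxt in seg.choices.values())


def missing_loops(story):
    """Decision points that have no looping footage to play while the viewer decides."""
    return [seg.name for seg in story.values() if seg.choices and not seg.loops_while_waiting]


story = {
    "intro": Segment("intro", 5, {"open the door": "hall", "run": "forest"}, True),
    "hall": Segment("hall", 5, {"go left": "crypt", "go right": "tower"}, True),
    "forest": Segment("forest", 5),
    "crypt": Segment("crypt", 5),
    "tower": Segment("tower", 5),
}
```

In this toy story a single viewer sees at most 15 minutes, but the app has to carry all 25 minutes (about 12.5 GB) of footage.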

I tentatively think that this project is the first of its kind in the world. I've never heard of anyone else making a choose-your-own-adventure film in VR. I think this could be a new product category, and it brings some interesting narrative tools to filmmakers. Branching stories are nothing new to video games, but they are new to immersive cinema. The project is a rough prototype which is seeking funding from Oculus via their Launchpad program, so it may never actually see the light of day. Only time will tell.

Spellbound Story Writing:

This month, I read the very first Harry Potter book. If Harry Potter is going to be a source of inspiration for the story, I should at least read the books so that I have a good understanding of their narrative structure and style. J.K. Rowling is a master writer and storyteller, and it would be nearly impossible to replicate her work. However, that's not really my intent. I realized that just by reading her books through the eyes of a humble amateur writer, I picked up some new writing techniques. There was evidence of intentional design in the way the story was written and laid out (lots of setups). She certainly didn't just write a first draft, call it good, and send it off to the publisher; I got the sense that it was an intentionally crafted series of events which went through several iterative writing cycles. In my first draft, I'm mostly going to write out the full story with the intention of throwing it away (like a rough sketch). The goal is not to write a publishable story, but to figure out what story I want to tell. The next iteration would be a good second draft, but the third, fourth, or fifth draft would probably be a lot closer to the final story. Once you know what story you're trying to tell and how you're trying to tell it, the subsequent drafts become more focused on telling it with flourish and style, rather than on figuring out what the story is and how to tell it.

The immediate production goal will be to finish up the current build I'm working on, and then spend a full month doing nothing but writing out the complete story as many times as it takes to get it right. The story will have to be complete (for the whole "Red Wizards Tale" series) so that I can properly structure the events to lead into the next story. It's always easier to change elements in an earlier story if you find yourself writing yourself into a corner or if the story is boring. And it's far better to fix the flaws in a story before you commit to the game production of each episode: know where you're going rather than blindly feeling your way forward.

The interesting challenge here will be figuring out how to properly integrate VR into the storytelling. So far, every story I have seen in VR has been garbage. I don't know exactly why, so I have to think long and hard about the underlying principles of VR narrative. One thing I know for certain, though: the story's protagonist must be the player. The story is what happens to the player, rather than something that is told to the player.

Spellbound AI:

This has been an ongoing effort on my part. I initially set out to just finish the boss monster (Sassafras) and replace the placeholder asset I currently have in the game. The idea was to create a boss with a few special abilities that generally makes life difficult for the player, as would be appropriate for a boss monster. Unfortunately, I got carried away. The characters in the game were carefully scripted expert systems built on state machines. Everything was hard coded, so things were pretty rigid. It works. Good enough. However, every action a character could perform was just a hard-coded "thing" that happened, rather than an ability which was used. So, I set out to change this: everything a character does should be an "ability"/"action" which it performs. The ability is character agnostic, so an "eat" ability would be a general ability, but its implementation would be character specific (polymorphism all the way!). Then I got this crazy idea: each ability is really like an output node on an artificial neural network graph. In my current case, I'm just hard coding when to execute which abilities, based on an expert system I hand crafted.
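Here's one way the ability idea could look, as a minimal Python sketch rather than the actual Unreal code. `Ability`, `EatAbility`, `Goblin`, `Zombie`, and `Food` are hypothetical names. The ability itself only decides whether it applies and then calls `eat`; each character class overrides `eat` (and `can_eat`) with its own behavior.

```python
from abc import ABC, abstractmethod


class Food:
    def __init__(self, kind, nutrition):
        self.kind = kind
        self.nutrition = nutrition


class Character:
    def __init__(self, name, hunger):
        self.name = name
        self.hunger = hunger

    def can_eat(self, food):
        return True  # default: eats anything

    def eat(self, food):
        self.hunger = max(0, self.hunger - food.nutrition)


class Goblin(Character):
    pass  # uses the default eating behavior


class Zombie(Character):
    def can_eat(self, food):
        return food.kind == "meat"

    def eat(self, food):
        # character-specific implementation: zombies get double value from meat
        self.hunger = max(0, self.hunger - 2 * food.nutrition)


class Ability(ABC):
    """A character-agnostic action; the character supplies the specifics."""
    name = "ability"

    @abstractmethod
    def can_use(self, user, target): ...

    @abstractmethod
    def use(self, user, target): ...


class EatAbility(Ability):
    name = "eat"

    def can_use(self, user, target):
        return isinstance(target, Food) and user.can_eat(target)

    def use(self, user, target):
        user.eat(target)  # polymorphic dispatch to Goblin/Zombie/etc.


eat = EatAbility()
goblin, zombie = Goblin("goblin", 10), Zombie("zombie", 10)
bread, meat = Food("bread", 3), Food("meat", 3)
if eat.can_use(zombie, meat):
    eat.use(zombie, meat)  # zombie.hunger: 10 -> 4
```

Each `Ability` subclass is then a natural candidate for an output node: the brain only needs to know which abilities exist and whether `can_use` is true.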

Okay. It works for a few monsters. But what happens if I want to create dozens of different monsters, all with different behavior patterns? Do I *really* want to go and code up brittle expert systems for each monster class? Or is there a way to avoid all of this future work by creating the framework for a more advanced AI system now?

I thought long and hard about how this new AI system should work. I don't want to hard code behaviors. I don't want an AI that needs to go through thousands of training cycles to get appropriate behavior. I want my AI system to gradually learn and get smarter. I want unscripted, intelligent behaviors. So, to get all of this, I need to invent a new type of AI system, and that requires creating a model for intelligence. I think I've got it. Here's a rough outline of how it will work:

Input Feed:

A list of interactable objects (food, characters, doors, walls, ladders, etc).

State of self (health, stamina)

Hazards

Decision Graph:

Given the list of known inputs, choose the best action to perform.

The best action is the output with the best reward.

Graph Construction:

The list of outputs is going to be created based on the list of inputs provided. We are going to create a list of possible final outputs.

Then, we're going to create a weighted graph from our current state to the final output state.

The weighting will be determined only by time cost (with travel time included).

Then, we're going to evaluate the traversal cost of each final output node. This is done by summing the costs of each node traversed.

The final output node with the best cost to reward ratio will become the "end goal".

The chosen action will be the first step towards the end goal node.

The graph is reconstructed only when the input feed items change (are added or removed). After reconstruction, the graph is re-evaluated.
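The construction and evaluation steps above can be sketched in Python as follows. This is an assumption-laden sketch, not the finished system: the graph is a plain dict of `{node: {next_node: time_cost}}`, I've swapped in Dijkstra's algorithm for the "sum the costs of each node traversed" step (it finds the cheapest traversal to every node), "best cost to reward ratio" is read as the highest reward per unit of time cost, and the node names and rewards are made up.

```python
import heapq


def shortest_paths(graph, start):
    """Dijkstra over {node: {neighbor: time_cost}}; returns (cost_to, previous_node)."""
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry
        for nxt, cost in graph.get(node, {}).items():
            nd = d + cost
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                prev[nxt] = node
                heapq.heappush(heap, (nd, nxt))
    return dist, prev


def choose_action(graph, start, rewards):
    """rewards: {final_output_node: reward}. Returns (end_goal, first_step)."""
    dist, prev = shortest_paths(graph, start)
    reachable = [g for g in rewards if g in dist and dist[g] > 0]
    if not reachable:
        return None, None
    goal = max(reachable, key=lambda g: rewards[g] / dist[g])  # best reward per unit cost
    step = goal
    while prev[step] != start:  # walk back to the first step toward the goal
        step = prev[step]
    return goal, step


graph = {
    "idle": {"walk_to_corpse": 4.0, "walk_to_player": 10.0},
    "walk_to_corpse": {"eat_corpse": 2.0},
    "walk_to_player": {"attack_player": 1.0},
}
goal, first_step = choose_action(graph, "idle", {"eat_corpse": 12.0, "attack_player": 33.0})
```

Because the graph only changes when the input feed does, the `shortest_paths` result could be cached and reused until an input is added or removed.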

Outputs:

A list of possible actions

Final Output:

A chosen action

So, we have a graph which is constructed from input nodes (senses) and output nodes (possible actions), and the input nodes are connected to the output nodes via a series of necessary interactions. The final output will be determined by the best cost vs. reward ratio. The key thing to note is that the "cost" comes from the graph itself, but the "reward" value is going to be a variable function based on the creature's brain value system.

The graph will be like a template framework which brains use to determine the best course of action at any given point in time. Individual personalities / behavior patterns will be created by tweaking a set of personality parameters which give various outcomes different reward weights. So, you can take the same graph, with outcomes which have the same edge weights, but the chosen outcome is going to vary by which brain is evaluating the rewards of each outcome! The brain of a goblin will choose different actions from the same weighted graph than a zombie would!
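To illustrate the "same graph, different brain" idea, here's a small Python sketch. It assumes the traversal cost of each final outcome has already been computed from the shared graph, and that each outcome is tagged with hypothetical features (`hunger`, `aggression`, `safety`); a `Brain`'s personality parameters are simply weights on those features. All names and numbers are illustrative.

```python
from dataclasses import dataclass

# Shared template: what each final outcome provides, and its precomputed traversal cost.
OUTCOMES = {
    "eat_corpse": {"features": {"hunger": 5.0}, "cost": 6.0},
    "attack_player": {"features": {"hunger": 2.0, "aggression": 5.0}, "cost": 11.0},
    "flee": {"features": {"safety": 6.0}, "cost": 3.0},
}


@dataclass
class Brain:
    name: str
    values: dict  # personality parameters: how much this creature cares about each feature

    def reward(self, features):
        # features this brain doesn't value contribute nothing
        return sum(self.values.get(k, 0.0) * v for k, v in features.items())

    def choose(self, outcomes):
        # same costs for everyone; only the reward side depends on the brain
        return max(outcomes, key=lambda o: self.reward(outcomes[o]["features"]) / outcomes[o]["cost"])


goblin = Brain("goblin", {"hunger": 1.0, "aggression": 0.5, "safety": 1.5})
zombie = Brain("zombie", {"hunger": 1.0, "aggression": 3.0})
```

With these made-up numbers, the goblin's high value on safety makes it flee, while the zombie, which cares only about food and violence, goes for the player, even though both read exactly the same costs.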

The only unknown right now is "how does a creature do long term planning via evaluating a chain of consequences?"

Anyway, a significant part of this month has been focused on creating the input and output layers of this graph. I have created the abilities system, whose abilities can be represented as output nodes, and I'm currently creating the sensory input systems, which are represented as input nodes. The current senses I am working on are:

-Eyesight

-Hearing

-Smell

Eyesight is pretty straightforward: you just attach a vision cone to a creature's head and feed the creature a list of all interactable objects which overlap the cone.
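A minimal Python sketch of the cone test, assuming positions are 3D tuples and the cone is defined by a half-angle and a maximum range (both hypothetical parameters). It only checks geometry; a real implementation would likely also raycast for occlusion so creatures can't see through walls.

```python
import math


def in_vision_cone(eye, forward, target, half_angle_deg, max_range):
    """Geometric test only: is target within max_range and half_angle_deg of forward?"""
    to_target = [t - e for t, e in zip(target, eye)]
    dist = math.sqrt(sum(c * c for c in to_target))
    if dist == 0.0:
        return True  # object at the eye itself
    if dist > max_range:
        return False
    fwd_len = math.sqrt(sum(c * c for c in forward))
    cos_angle = sum(a * b for a, b in zip(to_target, forward)) / (dist * fwd_len)
    return cos_angle >= math.cos(math.radians(half_angle_deg))


def visible_objects(eye, forward, objects, half_angle_deg=45.0, max_range=20.0):
    """The list of interactable objects fed to the creature each update."""
    return [name for name, pos in objects.items()
            if in_vision_cone(eye, forward, pos, half_angle_deg, max_range)]
```

Comparing cosines avoids an `acos` call per object, which matters a little when many creatures are checking many objects every frame.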

Hearing is a bit more challenging. I actually want my characters to listen for sounds and to interpret and identify them. The end goal is to have the player's microphone listening for the player's voice, so if the player is talking in the graveyard or screams at the sight of a zombie, nearby monsters will hear the player making noise and be alerted to their position.
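Hearing could start as something as simple as this Python sketch, which assumes each sound event carries a loudness in dB at 1 m and falls off with the free-field inverse-square law (about 6 dB per doubling of distance). The `SoundEvent` labels, the 30 dB hearing threshold, and the `mic_rms_to_db` mapping from microphone samples to in-game loudness are all hypothetical calibrations; identifying what a sound actually is would need much more than this.

```python
import math
from dataclasses import dataclass


@dataclass
class SoundEvent:
    kind: str          # hypothetical label, e.g. "scream", "footstep", "player_voice"
    position: tuple    # where the sound was made
    level_db: float    # loudness measured 1 m from the source


def perceived_level_db(event, listener_pos):
    """Free-field inverse-square falloff: -20*log10(distance) dB beyond 1 m."""
    d = max(1.0, math.dist(event.position, listener_pos))
    return event.level_db - 20.0 * math.log10(d)


def heard_sounds(listener_pos, events, threshold_db=30.0):
    """Sounds loud enough to alert a creature, with where they came from."""
    return [(e.kind, e.position) for e in events
            if perceived_level_db(e, listener_pos) >= threshold_db]


def mic_rms_to_db(samples, full_scale_db=90.0):
    """Map normalized mic samples (-1..1) to an in-game loudness (arbitrary calibration)."""
    if not samples:
        return float("-inf")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return full_scale_db + 20.0 * math.log10(rms) if rms > 0 else float("-inf")
```

With these numbers, a scream of 80 dB still reads 60 dB from 10 m away and alerts the monster, while a 40 dB whisper drops to 20 dB and goes unnoticed.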

Smell is going to be an interesting sense. I will attach "smell emitters" as a component to objects. Every second, the object emits a "smell", which is represented as a slowly expanding sphere. Each smell sphere will be linked to the next smell sphere, so it'll be sort of like a linked list. Once a smell sphere has expanded so much that the smell is sufficiently dissipated, it is no longer perceptible and gets destroyed. In effect, a player walking around the graveyard will emit a trail of "fresh meat" smells which zombies will latch onto and follow like bloodhounds.
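Here's a rough Python sketch of the smell trail, under a few assumptions of my own: each sphere releases a fixed amount of scent, its concentration is that amount divided by the sphere's volume, all spheres grow at the same rate (so the oldest always dissipates first and can be popped off the head of the list), and a creature follows the trail by heading toward the sphere after the freshest one it's standing in. `SmellSphere`, `SmellEmitter`, and `next_waypoint` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class SmellSphere:
    position: tuple
    intensity: float                      # total amount of scent released (assumed model)
    radius: float = 0.5
    newer: Optional["SmellSphere"] = None  # link to the next, fresher sphere in the trail

    def concentration(self):
        # scent spread evenly over the sphere's volume
        return self.intensity / ((4.0 / 3.0) * math.pi * self.radius ** 3)


class SmellEmitter:
    """Keeps a trail of smell spheres as a singly linked list, oldest first."""

    def __init__(self, intensity=10.0, growth_per_sec=0.5, min_concentration=0.05):
        self.intensity = intensity
        self.growth = growth_per_sec
        self.min_conc = min_concentration
        self.oldest = None
        self.newest = None

    def emit(self, position):
        sphere = SmellSphere(position, self.intensity)
        if self.newest is None:
            self.oldest = sphere
        else:
            self.newest.newer = sphere
        self.newest = sphere

    def tick(self, dt):
        node = self.oldest
        while node is not None:
            node.radius += self.growth * dt
            node = node.newer
        # every sphere grows at the same rate, so the oldest dissipates first
        while self.oldest is not None and self.oldest.concentration() < self.min_conc:
            self.oldest = self.oldest.newer
        if self.oldest is None:
            self.newest = None

    def spheres(self):
        out, node = [], self.oldest
        while node is not None:
            out.append(node)
            node = node.newer
        return out


def next_waypoint(emitter, nose_pos):
    """Head for the sphere after the freshest one the creature can currently smell."""
    inside = [s for s in emitter.spheres() if math.dist(s.position, nose_pos) <= s.radius]
    if not inside:
        return None
    freshest = inside[-1]
    return freshest.newer.position if freshest.newer else freshest.position


# Demo: a player walks one metre per second along the x axis for five seconds.
trail = SmellEmitter(intensity=10.0, growth_per_sec=0.5, min_concentration=0.05)
for x in range(5):
    trail.emit((float(x), 0.0, 0.0))
    trail.tick(1.0)
```

Following the `newer` links always leads toward fresher scent, which is what pulls a zombie along the trail toward where the player is now.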

A lot of this is still very much in the "concept" phase of development, so I may be completely wrong about some ideas and approaches. However, I think the concept for this type of AI is a bit different from anything else I've seen (though I am ignorant of what others have attempted, so this may just be a reinvention of the wheel). If all goes well, I'll have a generalized AI system which can be used for all creatures, where behavior becomes an emergent property of a brain with preset value systems. When the scope of my game expands, I'll have intelligent entities which learn to function within the environment with minimal extra work on my part.

I think once I have a fleshed out version of this AI system working, I'll write a comprehensive article detailing it out so other people can replicate it.

Startup Week:

Yesterday I gave a bunch of demos to the public during Startup week in Seattle. Everyone who played the old build was amazed by the tech, visuals and interaction systems. I wanted to say, "You think that's cool? Just wait until you see what's coming next...". I have slowly come to realize that my interface system is not easy enough for people to use. I still have to instruct people on how to play the game, so that means my game is not good enough.

Trademark Conflicts:

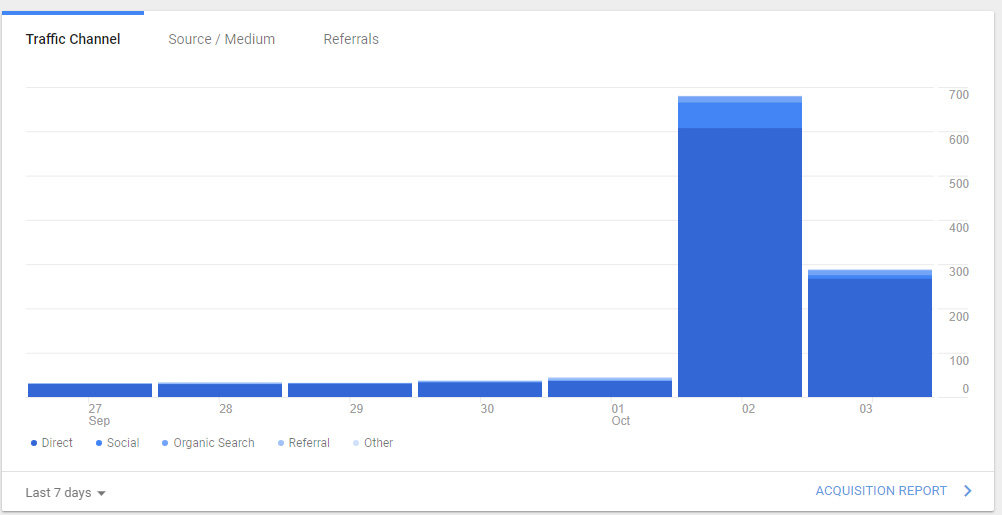

Today, it finally happened: I had my first trademark name conflict. On occasion, I look at the traffic to my Steam page via Google Analytics to see if there are any changes. Normally, the page averages about 30 visitors a day (that's pretty bad!). Two days ago, traffic jumped to 650 visitors in one day. WTF?

I had done absolutely nothing intentional to get this traffic. What happened? Why were people suddenly looking at my store page? Did I get a big break? Did I get surprise press coverage without knowing it? Did a social media page promote my game without my awareness? Did a popular YouTube video go up? A kindly worded Reddit post somewhere? Was Seattle Startup Week more positive exposure than I expected? Why did traffic spike?

It turns out that PC Gamer had released an article about a game currently in development by Chucklefish. The game looks like a version of Stardew Valley, but involving magic. Their internal name for the game? "Spellbound". This got coverage on Reddit with about 950 upvotes, so people were really excited about this game. As a result, people went to Steam and searched for "Spellbound", trying to find the store page for that game, but instead stumbled onto mine. Uh oh... consumers are confused! And also, "Yikes!". What happens if Chucklefish releases their game in a month or two, brands it as "Spellbound", and pushes a lot of marketing material promoting that branding, and then they realize that my game is getting confused with theirs and picking up their spillover traffic? What if their legal team then files a trademark to protect the name and sends me a "cease and desist" letter, forcing me to give up my game's name even though I had it first? Oh no... that would be a complete disaster for me, because I've been using the name for over a year, it's the name my customers are familiar with, and all of my hard-fought efforts would be undermined. It would be extremely disheartening.

So, today I learned all about trademarks. I filed a trademark application with the US Patent and Trademark Office to protect my brand name. I then sent a very kindly worded email to Chucklefish to inform them about the naming conflict. In hindsight, I should have done this over a year ago, so that other companies could look up the name in the trademark database before choosing it and avoid conflicts. Completely my fault! Let this be a lesson to you guys: before you name your game, check whether the name is trademarked, and if it's not, trademark it! Copyright protects creative works; trademarks protect brand names.

I expect this will never turn into an actual problem, so I'm not worried, but I filed the trademark as a precautionary measure. This is an area where you want to be proactive, rather than reactive like I was.

Your description of an AI decision graph sounds a lot like Behavior Trees. Is that something you've looked into at all? I know you're using Unreal 4; I believe it has some form of built-in support for them. Or do you see some core difference between the two concepts?